Does Data Have a Shelf Life?

Organizations are collecting and utilizing a massive amount of data. New research asks the question, “When is the right time to refresh that data?”


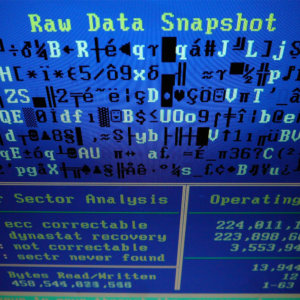

Generating insights from data is an important, and costly, undertaking for many companies. They spend time and effort collecting data, cleaning it, and devoting resources to finding something meaningful in it. But what happens after the insights have been generated? Do insights have a shelf life? If so, when should knowledge gleaned from old data be refreshed with new data?

In their new research paper, titled “When is the Right Time to Refresh Knowledge Discovered from Data?”, Xiao Fang and Olivia R. Liu Sheng of the University of Utah, in collaboration with Paulo Goes of the University of Arizona, argue that for real-world applications of Knowledge Discovery in Databases (KDD), such as analyzing customer purchase patterns or public health surveillance, keeping up with new data is imperative:

It could bring in new knowledge and invalidate part or even all of earlier discovered knowledge. As a result, knowledge discovered using KDD becomes obsolete over time. To support effective decision making, knowledge discovered using KDD needs to be kept current with its dynamic data source.

However, keeping knowledge current with its data sources is a fundamental challenge; according to the researchers, knowledge management practitioners rank it among their top three management issues:

A trivial solution is to run KDD whenever there is a change in data. However, such [a] solution is neither practical, due to the high cost of running KDD, nor necessary, since it often results in no new knowledge discovered. On the other hand, running KDD too seldom could lead to significant obsoleteness for the knowledge in hand. Therefore, it is critical to determine when to run KDD so as to optimize the trade-off between the obsoleteness of knowledge and the cost of running KDD.
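To make that trade-off concrete, here is a deliberately simple toy model in Python. It is not the researchers' model. It assumes, purely for illustration, that each KDD run has a fixed cost (`refresh_cost`) and that stale knowledge costs a little more in every period since the last refresh, growing linearly at a hypothetical `obsolescence_rate`. The code then searches for the fixed refresh interval with the lowest average cost per period. The function names and numbers are invented for this sketch.

```python
"""Toy illustration of the refresh trade-off: rerunning KDD costs a fixed
amount, while knowledge that is not refreshed grows steadily more obsolete."""


def average_cost(interval: int, refresh_cost: float, obsolescence_rate: float) -> float:
    """Long-run average cost per period if KDD is rerun every `interval` periods.

    Hypothetical assumption: in the k-th period after a refresh (k = 0, 1, ...),
    stale knowledge costs obsolescence_rate * k, i.e. obsolescence grows linearly.
    """
    if interval < 1:
        raise ValueError("interval must be at least 1 period")
    obsolescence = sum(obsolescence_rate * k for k in range(interval))
    # One KDD run plus the obsolescence accumulated over the cycle,
    # spread evenly across the periods in that cycle.
    return (refresh_cost + obsolescence) / interval


def best_interval(refresh_cost: float, obsolescence_rate: float, max_interval: int = 365):
    """Brute-force search for the fixed refresh interval with the lowest average cost."""
    costs = {
        t: average_cost(t, refresh_cost, obsolescence_rate)
        for t in range(1, max_interval + 1)
    }
    t_best = min(costs, key=costs.get)
    return t_best, costs[t_best]


if __name__ == "__main__":
    # Illustrative numbers only: a KDD run costs 50 units, and each period
    # without a refresh adds 4 units to the per-period obsolescence cost.
    print(best_interval(refresh_cost=50, obsolescence_rate=4))  # (5, 18.0)
```

Refreshing every period pays the KDD cost 50 times over in the long run (an average of 50 per period), while waiting too long lets obsolescence pile up; in between, refreshing every 5 periods averages 18 per period. Under this linear assumption the best interval lands near the square root of 2 × `refresh_cost` / `obsolescence_rate`, much like the classic economic order quantity rule in inventory management. Real data rarely decays this neatly, so a fixed schedule is only the simplest possible refresh policy.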

Their solution? Model an optimal knowledge refresh policy.

Given the authors' research areas, which span machine learning and data mining (Fang), data mining and optimization techniques (Sheng), and modeling of complex production and information systems (Goes), it is perhaps no surprise that their model is, for managers who don’t have a PhD in information systems, hopelessly bound up in mathematical equations.

So I asked the authors to explain in lay terms how their research, and their model, can help managers decide when to refresh knowledge discovered from data.
