Executives Are Coming to See RAI as More Than Just a Technology Issue

A panel of experts weighs in on whether responsible AI governance extends beyond technology leadership.

In collaboration with

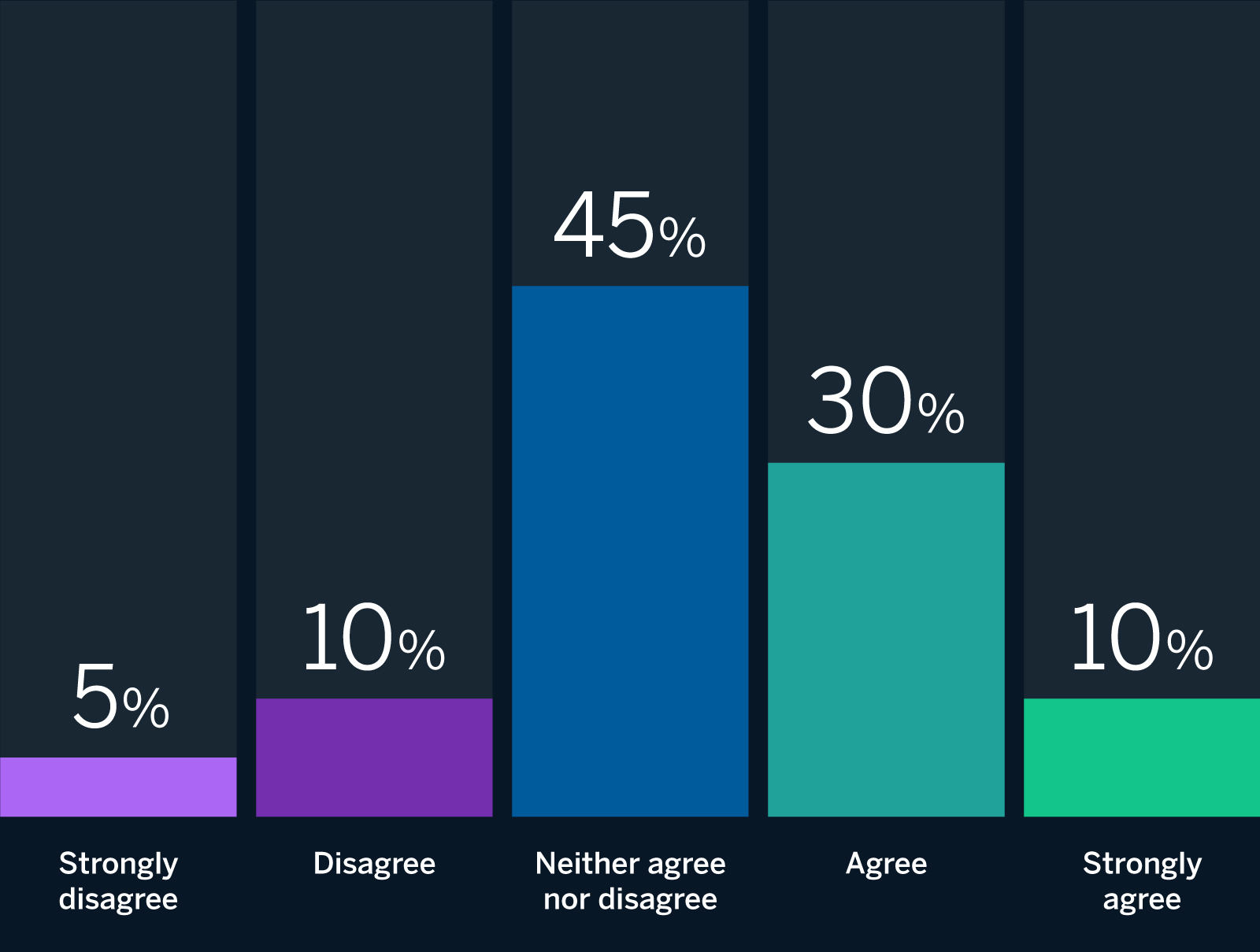

BCGMIT Sloan Management Review and BCG have assembled an international panel of AI experts that includes academics and practitioners to help us gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide. This month, we asked our expert panelists for reactions to the following provocation: Executives usually think of RAI as a technology issue. The results were wide-ranging, with 40% (8 out of 20) of our panelists either agreeing or strongly agreeing with the statement; 15% (3 out of 20) disagreeing or strongly disagreeing with it; and 45% (9 out of 20) expressing ambivalence, neither agreeing nor disagreeing. While our panelists differ on whether this sentiment is widely held among executives, a sizable fraction argue that it depends on which executives you ask. Our experts also contend that views are changing, with some offering ideas on how to accelerate this change.

In September 2022, we published the results of a research study titled “To Be a Responsible AI Leader, Focus on Being Responsible.” Below, we share insights from our panelists and draw on our own observations and experience working on RAI initiatives to offer recommendations on how to persuade executives that RAI is more than just a technology issue.

The Panelists Respond

Chart: Panelist responses to the statement "Executives usually think of RAI as a technology issue."

Source: Responsible AI panel of 20 experts in artificial intelligence strategy.

Chart: Responses from the 2022 Global Executive Survey. Less than one-third of organizations report that their RAI initiatives are led by technical leaders, such as a CIO or CTO.

Source: MIT SMR survey data excluding respondents from Africa and China, combined with Africa and China supplement data fielded in-country; n=1,202.

Executives’ Varying Perspectives on RAI

Many of our experts are reluctant to generalize when it comes to C-suite perceptions of RAI. For Linda Leopold, head of responsible AI and data at H&M Group, “Executives, as well as subject matter experts, often look at responsible AI through the lens of their own area of expertise (whether it is data science, human rights, sustainability, or something else), perhaps not seeing the full spectrum of it.” Belona Sonna, a Ph.D. candidate in the Humanising Machine Intelligence program at the Australian National University, agrees that “while those with a technical background think that the issue of RAI is about building an efficient and robust model, those with a social background think that it is more or less a way to have a model that is consistent with societal values.” Ashley Casovan, the Responsible AI Institute’s executive director, similarly contends that “it really depends on the executive, their role, the culture in their organization, their experience with the oversight of other types of technologies, and competing priorities.”

The extent to which an executive views RAI as a technology issue may depend not only on their own background and expertise but also on the nature of their organization's business and how much it uses AI to achieve outcomes. As Aisha Naseer, research director at Huawei Technologies (UK), explains, "Companies that do not deal with AI in terms of either their business/products (meaning they sell non-AI goods/services) or operations (that is, they have fully manual organizational processes) may not pay any heed or cater the need to care about RAI, but they still must care about responsible business. Hence, it depends on the nature of their business and the extent to which AI is integrated into their organizational processes." In sum, whether executives view RAI as a technology issue depends on both individual and organizational context.


An Overemphasis on Technological Solutions

While many of our panelists personally believe that RAI is more than just a technology issue, they acknowledge that some executives still harbor a narrower perspective. For example, Katia Walsh, senior vice president and chief global strategy and AI officer at Levi Strauss & Co., argues that “responsible AI should be part of the values of the full organization, just as critical as other key pillars, such as sustainability; diversity, equity, and inclusion; and contributions to making a positive difference in society and the world. In summary, responsible AI should be a core issue for an organization, not relegated to technology only.”

But David R. Hardoon, chief data and AI officer at UnionBank of the Philippines, observes that the reality is often different, noting, “The dominant approach undertaken by many organizations toward establishing RAI is a technological one, such as the implementation of platforms and solutions for the development of RAI.” Our global survey tells a similar story, with 31% of organizations reporting that their RAI initiatives are led by technical leaders, such as a CIO or CTO.

Several of our panelists contend that executives can place too much emphasis on technology, believing that technology will solve all of their RAI-related concerns. As Casovan puts it, "Some executives see RAI as just a technology issue that can be resolved with statistical tests or good-quality data." Nitzan Mekel-Bobrov, eBay's chief AI officer, shares similar concerns, explaining, "Executives usually understand that the use of AI has implications beyond technology, particularly relating to legal, risk, and compliance considerations, [but] RAI as a solution framework for addressing these considerations is usually seen as purely a technology issue." He adds, "There is a pervasive misconception that technology can solve all the concerns about the potential misuse of AI."

Our research suggests that RAI Leaders (organizations making a philosophical and material commitment to RAI) do not believe that technology can fully address the misuse of AI. In fact, our global survey found that RAI Leaders involve 56% more roles in their RAI initiatives than Non-Leaders (4.6 for Leaders versus 2.9 for Non-Leaders). Leaders recognize the importance of including a broad set of stakeholders beyond individuals in technical roles.

Neither agree nor disagree

“The multidisciplinary nature of responsible AI is both the beauty and the complexity of the area. The wide range of topics it covers can be hard to grasp. But to fully embrace responsible AI, a multitude of perspectives is needed. Thinking of it as a technology issue that can be ‘fixed’ only with technical tools is not sufficient.”

Attitudes Toward RAI Are Changing

Even if some executives still view RAI as primarily a technology issue, our panelists believe that attitudes toward RAI are evolving due to growing awareness and appreciation of RAI-related concerns. As Naseer explains, "Although most executives consider RAI a technology issue, due to recent efforts around generating awareness on this topic, the trend is now changing." Similarly, Francesca Rossi, IBM's AI Ethics global leader, observes, "While this may have been true until a few years ago, now most executives understand that RAI means addressing sociotechnological issues that require sociotechnological solutions." Finally, Simon Chesterman, senior director of AI governance at AI Singapore, argues that "like corporate social responsibility, sustainability, and respect for privacy, RAI is on track to move from being something for IT departments or communications to worry about to being a bottom-line consideration." In other words, RAI is evolving from a "nice to have" to a "must have."

For some panelists, these changing attitudes toward RAI correspond to a shift in industry's views of AI itself. As Oarabile Mudongo, a researcher at the Center for AI and Digital Policy, observes, "C-suite attitudes about AI and its application are changing." Likewise, Slawek Kierner, senior vice president of data, platforms, and machine learning at Intuitive, posits that "recent geopolitical events increased the sensitivity of executives toward diversity and ethics, while the successful industry transformations driven by AI have made it a strategic topic. RAI is at the intersection of both and hence makes it to the boardroom agenda."

For Vipin Gopal, chief data and analytics officer at Eli Lilly, this evolution depends on levels of AI maturity: “There is increasing recognition that RAI is a broader business issue rather than a pure tech issue [but organizations that are] in the earlier stages of AI maturation have yet to make this journey.” Nevertheless, Gopal believes that “it is only a matter of time before the vast majority of organizations consider RAI to be a business topic and manage it as such.”

Agree

“In order to elevate ourselves from viewing RAI as a technology issue, it’s important to view the challenges RAI surfaces as challenges that largely exist with or without AI.”

Ultimately, a broader view of RAI may require cultural or organizational transformation. Paula Goldman, chief ethical and humane use officer at Salesforce, argues that “tech ethics is as much about changing culture as it is about technology,” adding that “responsible AI can be achieved only once it is owned by everyone in the organization.” Mudongo agrees that “realizing the full potential of RAI demands a transformation in organizational thinking.”

Uniting diverse viewpoints can help. Richard Benjamins, chief AI and data strategist at Telefónica, asserts that executive-level leaders should ensure that technical AI teams and more socially oriented ESG (environmental, social, and governance) teams “are connected and orchestrate a close collaboration to accelerate the implementation of responsible AI.” Similarly, Casovan suggests that “the ideal scenario is to have shared responsibility through a comprehensive governance board representing both the business, technologists, policy, legal, and other stakeholders.” In our survey, we found that Leaders are nearly three times as likely as Non-Leaders (28% versus 10%) to have an RAI committee or board.

Recommendations

For organizations seeking to ensure that their C-suite views RAI as more than just a technology issue, we recommend the following:

- Bring diverse voices together. Executives have varying views of RAI, often based on their own backgrounds and expertise. It is critical to embrace genuine multi- and interdisciplinarity among those in charge of designing, implementing, and overseeing RAI programs.

- Embrace nontechnical solutions. Executives should understand that mature RAI requires going beyond technical solutions to challenges posed by technologies like AI. They should embrace both technical and nontechnical solutions, including a wide array of policies and structural changes, as part of their RAI program.

- Focus on culture. Ultimately, as Mekel-Bobrov explains, going beyond a narrow, technological view of RAI requires a “corporate culture that embeds RAI practices into the normal way of doing business.” Cultivate a culture of responsibility within your organization.

“Executives tend to think of RAI as a distraction. In fact, many of them know very little about RAI and are preoccupied with profit maximization. Until RAI finds its way toward regulations, it will remain in the periphery.”