To Be a Responsible AI Leader, Focus on Being Responsible

Findings from the 2022 Responsible AI Global Executive Study and Research Project

Executive Summary

As AI’s adoption grows more widespread and companies see increasing returns on their AI investments, the technology’s risks also become more apparent.1 Our recent global survey of more than 1,000 managers suggests that AI systems across industries are susceptible to failures, with nearly a quarter of respondents reporting that their organization has experienced an AI failure, ranging from mere lapses in technical performance to outcomes that put individuals and communities at risk. It is these latter harms that responsible AI (RAI) initiatives seek to address.

Meanwhile, lawmakers are developing the first generation of meaningful AI-specific legislation.2 For example, the European Union’s proposed AI Act would create a comprehensive scheme to govern the technology. And in the U.S., lawmakers in New York, California, and other states are working on AI-specific regulations to govern its use in employment and other high-risk contexts.3 In response to the heightened stakes around AI adoption and impending regulations, organizations worldwide are affirming the need for RAI, but many are falling short when it comes to operationalizing RAI in practice.

There are, however, exceptions. A number of organizations are bridging the gap between aspirations and reality by making a philosophical and material commitment to RAI, including investing the time and resources needed to create a comprehensive RAI program. We refer to them as RAI Leaders or Leaders. They appear to enjoy clear business benefits from RAI. Our research indicates that Leaders take a more strategic approach to RAI, led by corporate values and an expansive view of their responsibility toward a wide array of stakeholders, including society as a whole. For Leaders, prioritizing RAI is inherently aligned with their broader interest in leading responsible organizations.

This MIT Sloan Management Review and Boston Consulting Group report is based on our global survey, interviews with several C-level executives, and insights gathered from an international panel of more than 25 AI experts. (For more details on our methodology, including how the research team surveyed Africa and China, see “About the Research.”) It provides a high-level road map for organizations seeking to enhance their RAI efforts or become RAI Leaders. Though negotiating AI-related challenges and regulations can be daunting, the good news is that a focus on general corporate responsibility goes a long way toward achieving RAI maturity.

Introduction

Responsible AI has become a popular term in both business and the media. Many companies now have responsible AI officers and teams dedicated to ensuring that AI is developed and used appropriately. This emphasis reflects an increasingly common point of view that as AI gains influence over operations and how products work, companies need to address novel risks associated with this emerging technology.

However, leading companies are taking a more expansive approach: For them, RAI is about expanding their foundation of corporate responsibility. These companies are responsible businesses first: The values and principles that determine their approach to responsible conduct apply to their entire suite of technologies, systems, and processes. For these leading companies, RAI is less about a particular technology than about the company itself.

H&M Group is a case in point. Linda Leopold, the company’s head of responsible AI and data, recognizes, “There is a close connection between our strategy and our strategy for responsible AI and our efforts to promote social and environmental sustainability.” One example of where these strategies align “is our ambition to use AI as a tool to reduce CO2 emissions,” she explains.

Nitzan Mekel-Bobrov, chief AI officer at eBay, sees an inherent connection between RAI and a broader view of corporate responsibility. He notes, “Many of the core ideas behind responsible AI, such as bias prevention, transparency, and fairness, are already aligned with the fundamental principles of corporate social responsibility, so it should already feel natural for an organization to tie in its AI efforts.”

Their views on RAI reflect a powerful theme that runs throughout our research this year: As organizations develop and mature their RAI programs, they come to see RAI as an organizational issue, not just a technological one. At the same time, many organizations have yet to make this transition.

The State of RAI: Aspirations Versus Reality

Without question, AI adoption is accelerating across organizations in all industries and sectors. An overwhelming majority (90%) of the companies surveyed for MIT SMR and BCG’s 2019 report on AI had made investments in the technology.4 Our research suggests that organizations deploy AI to optimize internal business processes and improve external customer relations and products. Levi Strauss & Co. provides an example from the retail industry. “AI is starting to permeate the entirety of Levi Strauss & Co.,” observes Katia Walsh, the apparel company’s chief global strategy and AI officer. She explains that, far from playing a limited role in one area, AI is being implemented across the organization’s functional areas, from personalizing consumer experiences online and in stores to automating and optimizing internal processes, pricing and production, order fulfillment, and other initiatives. AI is being implemented horizontally across the enterprise to personalize customer search experiences, enhance internal efficiencies, predict demand for products, and more.

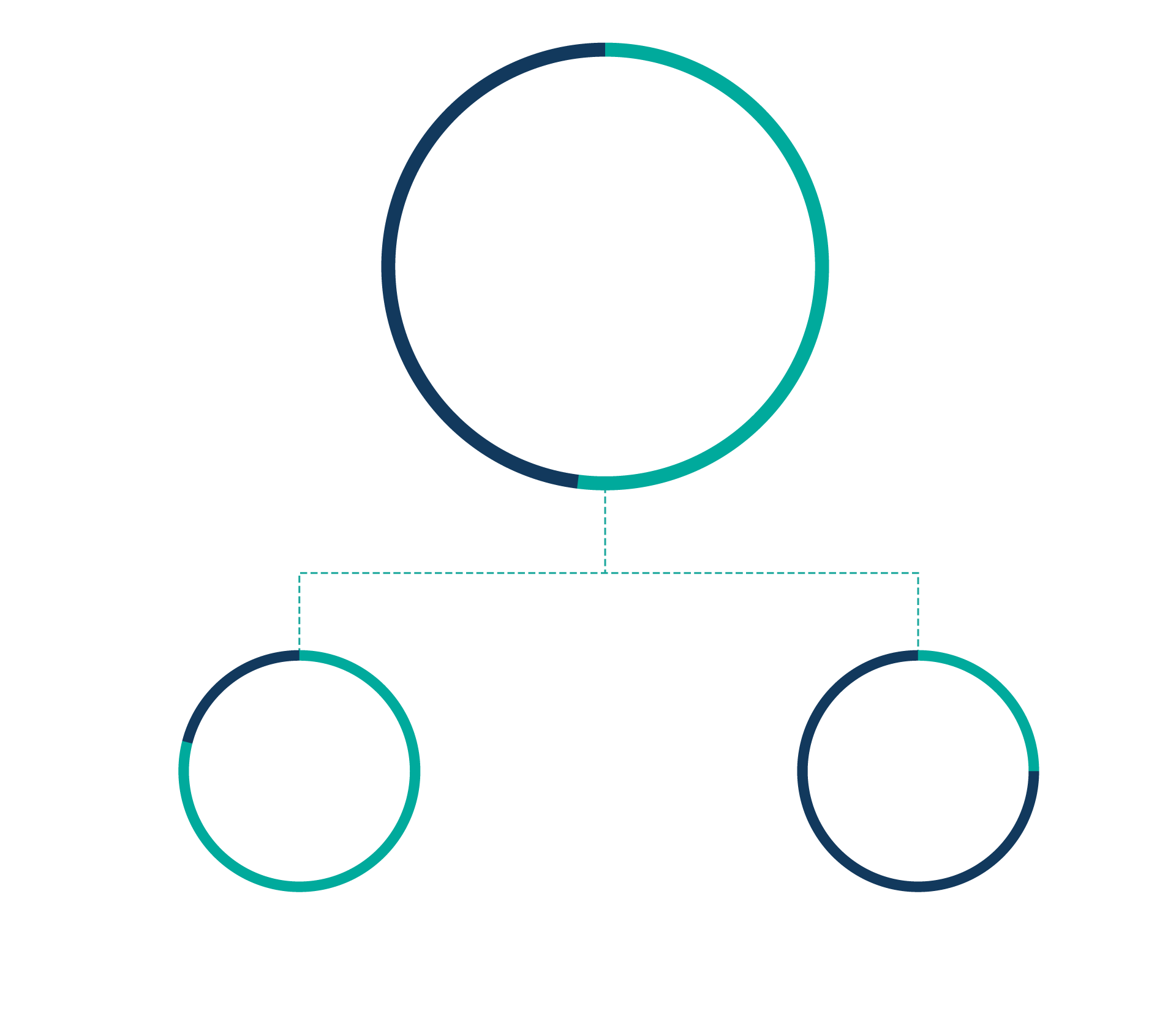

While corporate adoption of AI has been rapid and wide-ranging, the adoption of responsible AI across organizations worldwide has thus far been relatively limited. RAI is often seen as necessary to mitigate the technology’s risks — which encompass issues of safety, bias, fairness, and privacy, among others — yet it is by no means standard practice. Just over half of our respondents (52%) report that their organizations have an RAI program in place. Of those with an RAI program, a majority (79%) report that the program’s implementation is limited in scale and/or in scope. (See Figure 1.)

Figure 1

RAI Programs Are Not Yet Widespread

Just over half of the organizations we surveyed report having a responsible AI program.

Notably, 42% of our respondents say that AI is a top strategic priority for their organization, but even among those respondents, only 19% affirm that their organization has a fully implemented RAI program. In other words, responsible AI initiatives often lag behind strategic AI priorities. (See Figure 2.)

Figure 2

Failure to Prioritize RAI

Fewer than half of the respondents we surveyed view AI as a top strategic priority, and fewer than one-fifth have a fully implemented RAI program.

One factor that could be contributing to RAI’s limited implementation is confusion over the term itself. Given that RAI is a relatively nascent field, it is hardly surprising that there is a lack of consensus on the meaning of responsible AI. Only 36% of respondents believe the term is used consistently throughout their organization. Even enterprises that have implemented RAI programs find that the term is used inconsistently. Kathy Baxter, principal architect of ethical AI practice at Salesforce, notes that there has been discussion at the cloud-based software company over whether to use the term responsible AI or ethical AI and, indeed, over whether the two are interchangeable. Similarly, at H&M Group, Leopold notes that the terms ethical, trustworthy, and responsible in connection with AI are “used very much interchangeably.” To be consistent and avoid confusion, she and her team decided to use responsible as an umbrella term, with ethics as one key component.

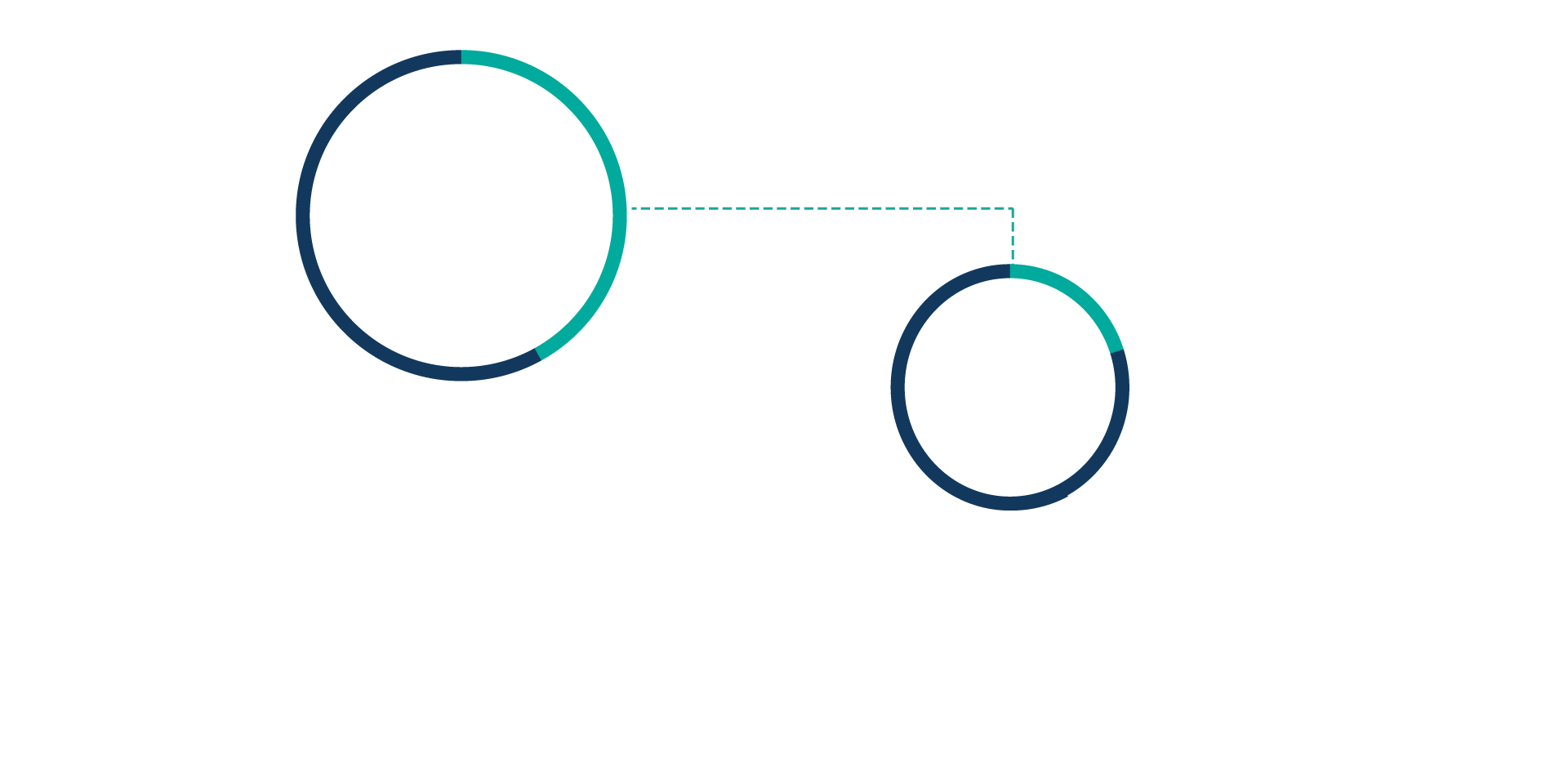

Other factors that contribute to the limited implementation of RAI have less to do with the technical complexities of AI than with more general organizational challenges. When respondents were asked which factors were preventing their organizations from starting, sustaining, or scaling RAI initiatives, the most common factors were shortcomings related to expertise and talent, training or knowledge among staff members, senior leadership prioritization, funding, and awareness. (See Figure 3.)

Figure 3

Organizational Constraints Limit RAI Efforts

General organizational challenges limit organizations’ ability to implement responsible AI initiatives.

Given the rapid spread of AI technology and growing awareness of its risks, most organizations recognize the importance of RAI and want to prioritize it. The vast majority of respondents (84%) believe that RAI should be part of the top management agenda. Several of our RAI panel members share that sentiment.5 Paula Goldman, chief ethical and humane use officer at Salesforce, forcefully makes the point, declaring, “As we navigate increasing complexity and the unknowns of an AI-powered future, establishing a clear ethical framework isn’t optional. It’s vital for its future.” Riyanka Roy Choudhury, a CodeX fellow at Stanford Law School’s Computational Law Center, concurs, describing RAI as “an economic and social imperative.” She adds, “It’s vital that we are able to explain the decisions we use AI to make, so it is important for companies to include responsible AI as a part of the top management agenda.” Our research supports the prioritization of RAI as a business imperative.

Despite widespread agreement regarding the importance of RAI, however, the reality is that most organizations have yet to translate their beliefs into action. Furthermore, even organizations that have implemented RAI to some degree have, in most cases, done so to only a limited extent. Of the 84% of respondents who believe that RAI should be a top management priority, only 56% say that it is in fact a top priority. And of those, only 25% report that their organizations have a fully mature RAI program in place. (See Figure 4.)

Figure 4

Not Walking the Talk

While a majority of respondents believe responsible AI should be a top management priority, only one-quarter have a fully mature RAI program in place.

RAI Leaders Bridge the Gap

A small cohort of organizations, representing 16% of our survey respondents, has managed to bridge the gap between aspirations and reality by taking a more strategic approach to RAI. These RAI Leaders have distinct characteristics compared with the remainder of the survey population (84%), whom we characterize as Non-Leaders.6 Specifically, they are organizations whose management prioritizes RAI, that include a wide array of participants in RAI implementations, and that have an expansive view of their stakeholders with respect to RAI. Accordingly, three-quarters (74%) of Leaders report that RAI is in fact part of the organization’s top management agenda, as opposed to just 46% of Non-Leaders. This prioritization is reflected in the commitment of 77% of Leaders to invest material resources in their RAI efforts, as opposed to just 39% of Non-Leaders.

Steven Vosloo, digital policy specialist in UNICEF’s Office of Global Insight and Policy, attests to the importance of leadership support for RAI practices. “It is not enough to expect product managers and software developers to make difficult decisions around the responsible design of AI systems when they are under constant pressure to deliver on corporate metrics,” he contends. “They need a clear message from top management on where the company’s priorities lie and that they have support to implement AI responsibly.” Without leadership support, practitioners may lack the necessary incentives, time, and resources to prioritize RAI.

In addition to investing in their RAI efforts, Leaders also include a broader range of participants in those efforts. Leaders include 5.8 roles in their RAI efforts, on average, as opposed to only 3.9 roles for Non-Leaders. Notably, this involvement is tilted toward senior positions. Leaders engage 59% more C-level roles in their RAI initiatives than Non-Leaders, and nearly half (47%) of Leaders involve the CEO in their RAI initiatives, more than double the percentage of Non-Leaders (23%). (See Figure 5.)

Figure 5

RAI Implementation: Leaders Versus Non-Leaders

RAI Leaders show maturity across a variety of dimensions.

Leaders believe that RAI should engage a broad range of participants beyond the organization’s boundaries, even viewing society as a whole as a key stakeholder. Significantly, a strong majority of Leaders (73%) see their RAI efforts as part of their broader corporate social responsibility (CSR) efforts. Brian Yutko, Boeing’s vice president and chief engineer for sustainability and future mobility, embraces this outlook: “There’s nothing that we can do in this industry that doesn’t come with safety as one of the driving requirements. So, it’s hard for me to extract ‘responsible AI’ from the notion of safety, because that’s just simply what we do.” H&M’s Leopold suggests that RAI is connected to CSR, “but it needs to be treated as a separate topic with its own specific challenges and goals. It’s not entirely overlapping and connected” with CSR. Non-Leaders are more likely to define RAI in relation to their business bottom line or internal stakeholders, with only 35% connecting RAI with CSR efforts.7

Our research indicates that as organizations mature their RAI initiatives, they become even more interested in aligning their AI use and development with their values and broader social responsibility, and less concerned with limiting risk and realizing business benefits.

It is also significant that Leaders are far more likely than Non-Leaders to disagree that RAI is a “check the box” exercise (61% versus 44%, respectively). These divergent outlooks are associated with different outcomes: Our survey results show that organizations with a box-checking approach to RAI are more likely to experience AI failures than Leader organizations.

RAI Leaders Realize Clear Business Benefits

As we have noted, RAI Leaders can realize measurable business benefits from their RAI efforts even if they are not primarily motivated by the promise of such benefits. Benefits include better products and services, improved brand differentiation, accelerated innovation, enhanced recruiting and retention, increased customer loyalty, and improved long-term profitability, as well as a better sense of preparedness for emerging regulations.

Overall, 41% of Leaders affirm that they are already realizing business benefits from their RAI efforts, compared with only 14% of Non-Leaders. Moreover, the benefits of AI maturity are amplified when organizations have a robust RAI program in place.8 Thirty percent of RAI Leaders see business benefits from their RAI programs even with immature AI efforts, compared with just 11% of Non-Leaders. Forty-nine percent of Leaders see business benefits from their RAI programs with mature AI efforts, compared with 23% of Non-Leaders. Whether their AI program is mature or immature, Leaders stand to reap more business benefits with RAI.

In addition to increasing business benefits, mature RAI programs also reduce the risks associated with AI itself. With growing AI maturity, and as more AI applications are deployed, the risk of AI failures increases. Increasing RAI maturity ahead of AI maturity significantly reduces the risks associated with scaling AI efforts over time and helps organizations identify AI lapses. Conversely, organizations that mature their AI programs before adopting RAI see more AI failures.

In terms of specific business benefits, half of Leaders report better products and services as a result of their RAI efforts, whereas only 19% of Non-Leaders do. Almost as many Leaders (48%) say that their RAI efforts have resulted in enhanced brand differentiation, while only 14% of Non-Leaders have realized such benefits.

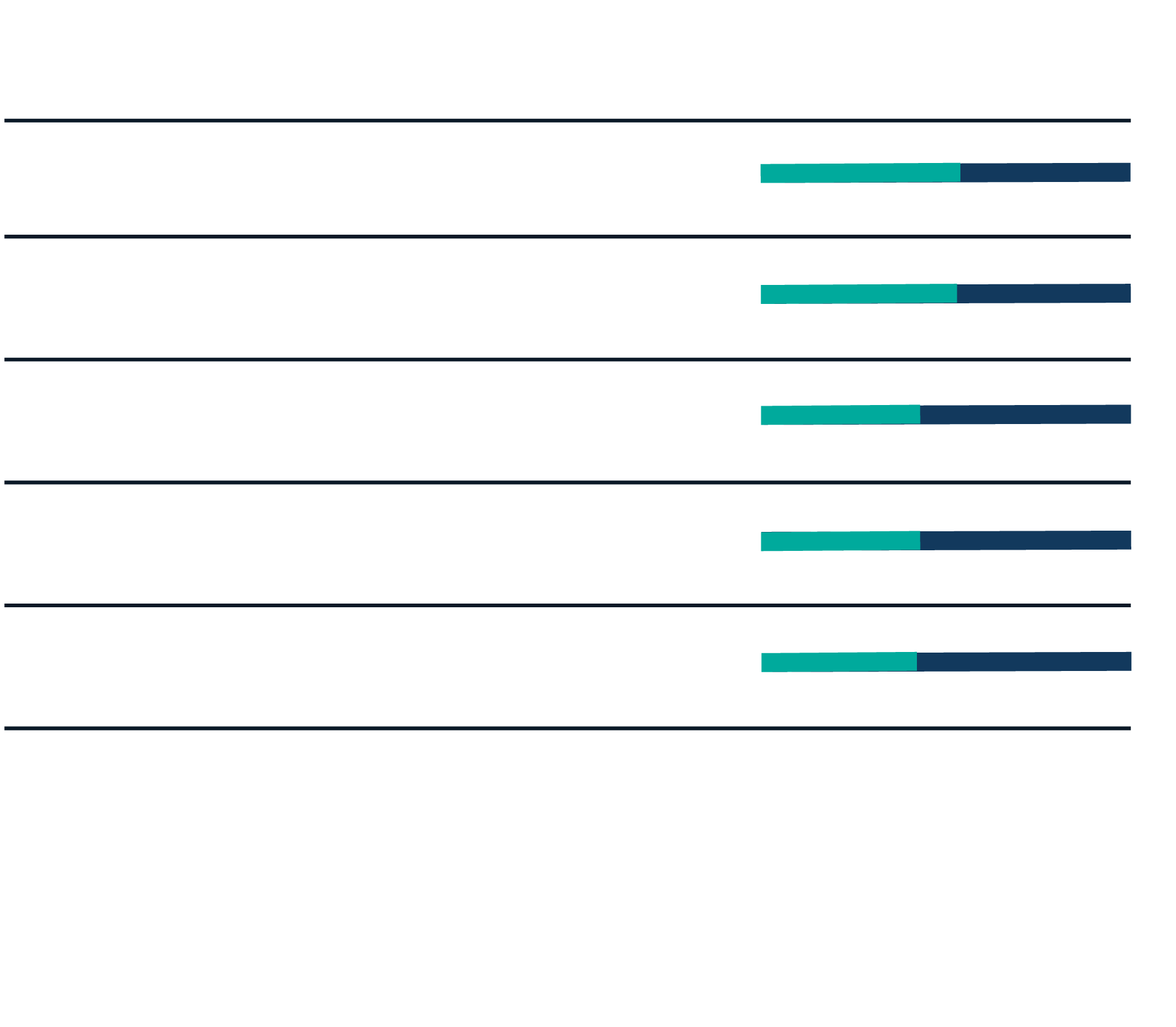

Furthermore, contrary to popular perception, 43% of Leaders report accelerated innovation as a result of their RAI efforts, compared with only 17% of Non-Leaders. (See Figure 6.) Indeed, the overwhelming majority of our AI panel sees RAI as having a positive impact on innovation, with many citing the fact that RAI can help curtail the kinds of negative effects of AI that can hinder its development or adoption.9

Figure 6

RAI Leaders Realize More Business Benefits

Leaders are nearly three times as likely to realize business benefits from their organizations’ RAI initiatives.

Vipin Gopal, chief data and analytics officer at Eli Lilly, believes that rather than stifling innovation, “responsible AI enables responsible innovation.” He explains: “It would be hard to make the argument that a biased and unfair AI algorithm powers better innovation compared with the alternative. Similar observations can be made with other dimensions of responsible AI, such as security and reliability. In short, responsible AI is a key enabler to ensure that AI-related innovation is meaningful and something that positively benefits society at large.”

Finally, with AI regulations on the horizon, Leaders also report feeling better prepared. Most organizations say that they are ill-equipped to face the forthcoming regulatory landscape. But our survey results indicate that those with a mature RAI program in place feel more prepared. A majority of Leaders (51%) feel ready to meet the requirements of emerging AI regulations, compared with less than a third (30%) of organizations with nascent RAI programs.

Recommendations for Aspiring RAI Leaders

Clearly, there are compelling reasons for organizations to transform their own RAI aspirations into reality, including general corporate responsibility, the promise of a range of business benefits, and potentially better preparedness for new regulatory frameworks. How, then, should businesses begin or accelerate this process? The following recommendations, inspired by lessons from current RAI Leaders, can help organizations scale or mature their own RAI programs.

Ditch the “check the box” mindset. In the face of impending AI regulations and increasing AI lapses, RAI may help organizations feel more prepared. But a mature RAI program is not driven solely by regulatory compliance or risk reduction. Consider how RAI aligns with or helps to express your organizational culture, values, and broader CSR efforts.

Zoom out. Take a more expansive view of your internal and external stakeholders when it comes to your own use or adoption of AI, as well as your AI offerings, including by assessing the impact of your business on society as a whole. Consider connecting your RAI program with your CSR efforts if those are well established within the organization. There are often natural overlaps and instrumental reasons for linking the two.

Start early. Launch your RAI efforts as soon as possible to address common hurdles, including a lack of relevant expertise or training. It can take time — our survey shows three years on average — for organizations to begin realizing business benefits from RAI. Even though it might feel like a long process, we are still early in the evolution of RAI implementation, so your organization has an opportunity to be a powerful leader in your specific industry or geography.

Walk the talk. Adequately invest in every aspect of your RAI program, including budget, talent, expertise, and other human and nonhuman resources. Ensure that RAI education, awareness, and training programs are sufficiently funded and supported. Engage and include a wide variety of people and roles in your efforts, including at the highest levels of the organization.

Conclusion

We are at a time when AI failures are beginning to multiply and the first AI-related regulations are coming online. While both developments lend urgency to the efforts to implement responsible AI programs, we have seen that companies leading the way on RAI are not driven primarily by risks, regulations, or other operational concerns. Rather, our research suggests that Leaders take a strategic view of RAI, emphasizing their organizations’ external stakeholders, broader long-term goals and values, leadership priorities, and social responsibility.

Even though there are unique properties of AI that require an organization to articulate specific cultural attitudes, priorities, and practices, similar strategic considerations might influence how an organization approaches the development or use of blockchain, quantum computing, or any other technology, for that matter.

Given the high stakes surrounding AI, and the clear business benefits stemming from RAI, organizations should consider how to mature their RAI efforts and even seek to become Leaders. Philip Dawson, AI policy lead at the Schwartz Reisman Institute for Technology and Society, warns of liabilities for corporations that neglect to approach this issue strategically. “Top management seeking to realize the long-term opportunity of artificial intelligence for their organizations will benefit from a holistic corporate strategy under its direct and regular supervision,” he asserts. “Failure to do so will result in a patchwork of initiatives and expenditures, longer time to production, damages that could have been prevented, reputational damages, and, ultimately, opportunity costs in an increasingly competitive marketplace that views responsible AI as both a critical enabler and an expression of corporate values.”

On the flip side of those liabilities, of course, are the benefits that we have seen accrue to Leaders that adopt a more strategic view. Leaders go beyond talking the talk to walking the walk, bridging the gap between aspirations and reality. They demonstrate that responsible AI actually has less to do with AI than with organizational culture, priorities, and practices — how the organization views itself in relation to internal and external stakeholders, including society as a whole.

In short, RAI is not just about being more responsible for a special technology. RAI Leaders see RAI as integrally connected to a broader set of corporate objectives, and to being a responsible corporate citizen. If you want to be an RAI Leader, focus on being a responsible company.

Appendix: Responsible AI Adoption in Africa and China

In order to better understand how industry stakeholders in Africa and China approach responsible AI, our research team conducted separate surveys in those two key geographies. The Africa survey, conducted in English, returned 100 responses, and the China survey, localized in Mandarin Chinese, returned 99. African respondents represented organizations grossing at least $100 million in annual revenues, and Chinese respondents represented organizations grossing at least $500 million.

A majority of respondents in Africa (74%) agree that responsible AI is a top management agenda item in their organizations. Sixty-nine percent agree that their organizations are prepared to address emerging AI-related requirements and regulations. A majority of African respondents (55%) report that their organizations’ RAI efforts have been underway for a year or less (45% for six to 12 months, and 10% for less than six months).

In China, 63% of respondents agree that responsible AI is a top management agenda item, and the same percentage agree that their organizations are prepared to address requirements and regulations. Based on our survey data, organizations in China appear to have longer-standing RAI efforts, with respondents reporting that their organizations have focused on RAI for one to three years (39%) or more than five years (20%).

Respondents in both geographies have realized clear business benefits from their RAI efforts. A majority of respondents — 55% in Africa and 51% in China — cite better products and services as a top benefit. A significant minority have benefited from increased customer retention — 38% in Africa and 34% in China. In Africa, 38% of respondents also cite improved longer-term profitability, while 40% of respondents in China say they have experienced accelerated innovation as a result of RAI. (See Figure 7.)

Figure 7

How Africa and China Focus on Responsible AI

Respondents from China and Africa share the business benefits their organizations gain from RAI efforts.

About the Research

In the spring of 2022, MIT Sloan Management Review and Boston Consulting Group fielded a global executive survey to learn the degree to which organizations are addressing responsible AI. We focused our analysis on 1,093 respondents representing organizations reporting at least $100 million in annual revenues. These respondents represented companies in 22 industries and 96 countries. The team separately fielded the survey in Africa, as well as a localized version in China, to yield 100 and 99 responses from those geographies, respectively.

We defined responsible AI as “a framework with principles, policies, tools, and processes to ensure that AI systems are developed and operated in the service of good for individuals and society while still achieving transformative business impact.”

To quantify what it means to be a responsible AI Leader, the research team conducted a cluster analysis on three numerically encoded survey questions: “What does your organization consider part of its responsible AI program? (Select all that apply.)”; “To what extent are the policies, processes, and/or approaches indicated in the previous question implemented and adopted across your organization?”; and “Which of the following considerations do you personally regard as part of responsible AI? (Select all that apply.)” The first and third questions were recategorized into six options each to ensure that both aspects were weighted equally. The team then used an unsupervised machine learning algorithm (K-means clustering) to identify naturally occurring clusters based on the scale and scope of the organization’s RAI implementation. K-means requires the number of clusters (K) to be specified in advance; this number was verified through exploratory data analysis of the survey data and direct visualization of the clusters via UMAP. We then defined an RAI Leader as an organization in the most mature of the three maturity clusters identified through this analysis. Scale is defined as the degree to which RAI efforts are deployed across the enterprise (e.g., ad hoc, partial, enterprisewide). Scope includes the elements that are part of the RAI program (e.g., principles, policies, governance) and the dimensions covered by the RAI program (e.g., fairness, safety, environmental impact). Leaders were the most mature in terms of both scale and scope.
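The clustering step described above can be illustrated with a small sketch. This is not the research team's code: it assumes each respondent has already been reduced to a hypothetical three-number vector (program elements selected out of six, implementation extent on a 1-to-4 scale, considerations selected out of six), runs plain K-means (Lloyd's algorithm) with K = 3, and uses a deterministic farthest-first seeding chosen only so the toy example is reproducible. The rule for picking the "most mature" cluster (highest mean total score), the toy data, and all function names are assumptions for illustration; the UMAP visualization step is omitted.

```python
from typing import List, Sequence

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(points: List[Vector]) -> Vector:
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(column) / len(points) for column in zip(*points)]


def farthest_first_init(points: List[Vector], k: int) -> List[Vector]:
    """Deterministic seeding: start at the first point, then repeatedly add
    the point farthest from all centroids chosen so far."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        next_point = max(
            points, key=lambda p: min(squared_distance(p, c) for c in centroids)
        )
        centroids.append(list(next_point))
    return centroids


def kmeans(points: List[Vector], k: int, max_iterations: int = 100) -> List[int]:
    """Lloyd's algorithm: alternate assignment and update steps until stable."""
    centroids = farthest_first_init(points, k)
    labels: List[int] = []
    for _ in range(max_iterations):
        # Assignment step: each respondent joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:  # no respondent changed cluster: converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = centroid(members)
    return labels


def most_mature_cluster(points: List[Vector], labels: List[int], k: int) -> int:
    """Hypothetical rule: the cluster whose members have the highest mean total."""
    def mean_total(c: int) -> float:
        totals = [sum(p) for p, label in zip(points, labels) if label == c]
        return sum(totals) / len(totals) if totals else float("-inf")
    return max(range(k), key=mean_total)


# Hypothetical encoding per respondent:
# [program elements selected (0-6), implementation extent (1-4),
#  considerations selected (0-6)]
respondents: List[Vector] = [
    [1, 1, 1], [0, 1, 2], [1, 2, 1],
    [3, 2, 3], [4, 3, 3], [3, 2, 4],
    [6, 4, 6], [5, 4, 6], [6, 4, 5],
]

labels = kmeans(respondents, k=3)
leader_cluster = most_mature_cluster(respondents, labels, k=3)
leaders = [i for i, label in enumerate(labels) if label == leader_cluster]
print(labels, leader_cluster, leaders)
```

On this toy data the three groups separate cleanly, and the last three respondents form the most mature ("Leader") cluster. Because the hypothetical columns use different ranges, a real analysis would likely rescale them before clustering; the recategorization of the first and third questions into six options each, as described above, serves a comparable goal of equal weighting.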

Finally, the research team assembled a panel of 26 RAI thought leaders from industry and academia, who were polled on key questions multiple times throughout the research cycle to inform this research. We conducted deeper-dive interviews with four of those panelists.