Is Your Organization Investing Enough in Responsible AI? ‘Probably Not,’ Says Our Data

In collaboration with BCG

For the second year in a row, MIT Sloan Management Review and Boston Consulting Group have assembled an international panel of AI experts to help us understand how responsible artificial intelligence (RAI) is being implemented across organizations worldwide. For our final question in this year’s research cycle, we asked our academic and practitioner panelists to respond to this provocation: As the business community becomes more aware of AI’s risks, companies are making adequate investments in RAI.

While their reasons vary, most panelists recognize that RAI investments are falling short of what’s needed: Eleven out of 13 were reluctant to agree that organizations’ investments in responsible AI are “adequate.” The panelists largely affirmed findings from our 2023 RAI global survey, in which fewer than half of respondents said they believe their company is prepared to make adequate investments in RAI. This is a pressing leadership challenge for companies that are prioritizing AI and must manage AI-related risks.

The need to expand investments in RAI marks a significant shift now that “AI-related risks are impossible to ignore,” observes Linda Leopold, head of responsible AI and data at H&M Group. While making adequate investments in RAI used to be an important but not particularly urgent issue, Leopold suggests that recent advances in generative AI and the proliferation of third-party tools have made the urgency of such investments clear.

Making adequate investments in RAI is complicated by measurement challenges. There are no industry standards that offer guidance, so how do you know when or if your investments are sufficient? What’s more, rational executives can have different opinions about what constitutes an adequate investment. What’s clear is that epistemic questions should not be a barrier to action, especially when AI risks pose real business threats.

The Panelists Respond

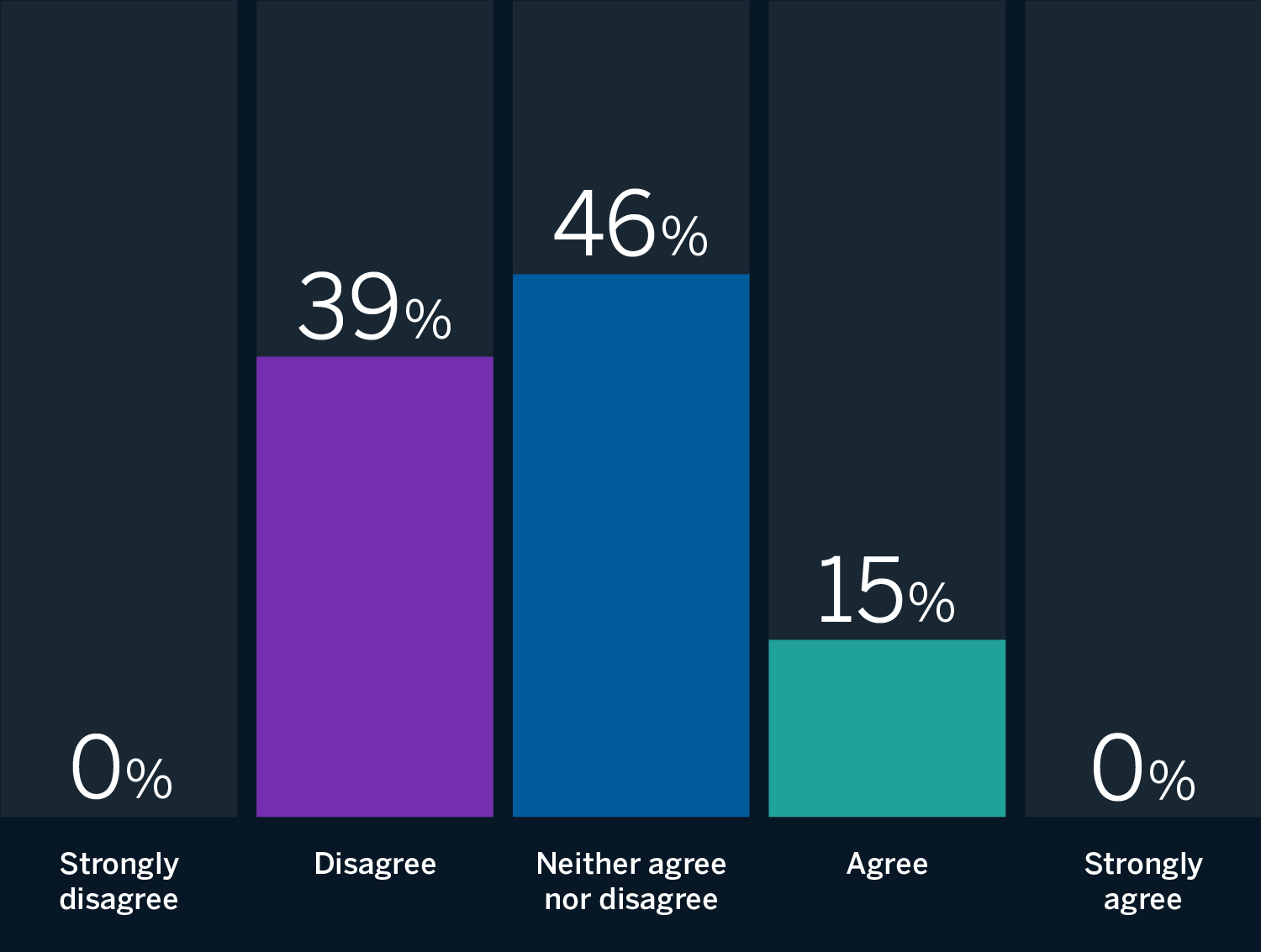

It is unclear if organizations are making appropriate investments in RAI.

Most panelists are reluctant to agree that organizations are making “adequate” investments in RAI.

Source: Responsible AI panel of 13 experts in artificial intelligence strategy.

Responses from the 2023 Global Executive Survey

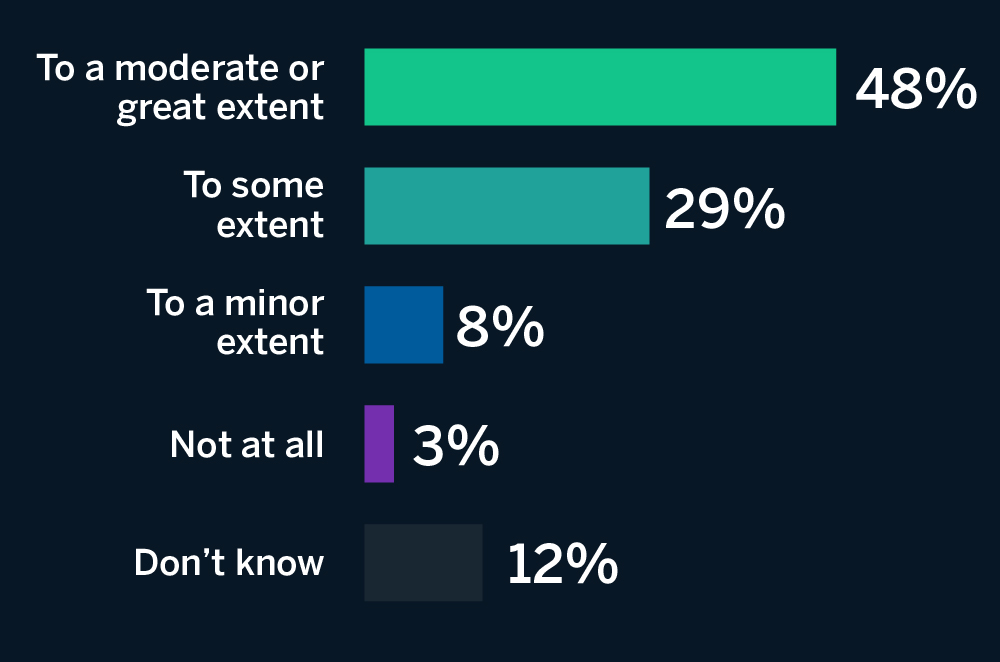

“To what extent do you believe that your organization is prepared to invest (reduce revenue, increase costs) to comply with responsible AI initiatives?”

Source: Survey data of 1,240 respondents representing organizations reporting at least $100 million in annual revenues. These respondents represented companies in 59 industries and 87 countries. The survey fielded in China was localized.

Companies Are Investing in RAI, but Not Enough

Panelists offered several reasons why companies aren’t making adequate investments in responsible AI. Several panelists suggested that profit motives are a factor. Tshilidzi Marwala, rector of the United Nations University and U.N. undersecretary general, contends that only “20% of the companies aware of AI risks are investing in RAI programs. The rest are more concerned about how they can use AI to maximize their profits.” Simon Chesterman, the David Marshall Professor and vice provost at the National University of Singapore, adds, “The gold rush around generative AI has led to a downsizing of safety and security teams in tech companies, and a shortened path to market for new products. … Fear of missing out is triumphing — in many organizations, if not all — over risk management.”

Another reason behind underinvestment is that greater use of AI throughout an enterprise expands the scope of RAI and increases required investments. As Philip Dawson, head of AI policy at Armilla AI, observes, “Investment in AI capabilities continues to vastly outstrip investment in AI safety and tools to operationalize AI risk management. Enterprises are playing catch-up in an uphill battle that is only getting steeper.” Leopold adds that generative AI and an increasing number of possible applications “significantly increases the size of the audience that responsible AI programs have to reach.”

A third reason is that growing awareness of AI risks does not always translate into accurate assessments of those risks. Oarabile Mudongo, a policy specialist at the African Observatory on Responsible AI, suggests that some companies might not be investing enough in their RAI programs because they “underestimate the extent of AI risks.”

How Do You Know Whether You’re Investing Enough?

Other panelists pointed out that although making adequate investments in RAI is important, it’s hard to gauge whether the investment is large enough or effective. How should companies calculate a return on RAI investments? What counts as an “adequate” return? What even counts as an investment? David R. Hardoon, CEO of Aboitiz Data Innovation and chief data and AI officer of Union Bank of the Philippines, asks, “How can the investments in RAI be broadly, and generally, considered adequate if (1) all companies are not subjected to the same levels of standards, (2) we have yet to agree on what is ‘sufficient’ or ‘acceptable,’ or (3) we don’t have a mechanism to verify third parties?”

Triveni Gandhi, responsible AI lead at Dataiku, agrees: “Among those companies that have taken the time to invest in RAI programs, there is wide variation in how these programs are actually designed and implemented. The lack of cohesive or clear expectations on how to implement or operationalize RAI values makes it difficult for organizations to start investing efficiently.”

“It is difficult to determine the extent to which companies are making adequate investments in responsible AI programs, as there is a large spectrum of awareness and relevance,” asserts Giuseppe Manai, cofounder, chief operating officer, and chief scientist at Stemly. Aisha Naseer, director of research at Huawei Technologies (UK), adds, “It is not evident whether these investments are adequate or enough, as these range from trivial to huge funds being allocated for the control of AI and associated risk mitigation.”

Recommendations

For organizations seeking to adequately invest in RAI, we recommend the following:

1. Build leadership awareness of AI risks. Leaders need to be aware that using AI is a double-edged sword that introduces risks as well as benefits. Focusing on investments that increase the benefits of AI without a commensurate focus on managing its risks undermines value creation in two ways. First, without appropriate guardrails, the benefits from AI might be underwhelming: Our research has found that innovation with AI increases when RAI practices are in place. Second, risks from AI are proliferating as quickly as the tools they are embedded in. A robust awareness of AI’s risks and benefits supports leadership efforts to manage risks and grow the company. Building management awareness can take many forms, including executive education, discussion forums, and RAI programs that have a variety of stakeholders with different types of exposure to (and experience with) AI risks.

2. Accept that investing in RAI will be an ongoing process. As AI development and use increase — with no foreseeable end — RAI practices and implementations need to adapt as well. That requires ongoing RAI investment to continually evolve an RAI program. With the regulatory landscape poised for change in the European Union, the U.S., and Asia, leaders will face growing pressure to evolve their AI-focused risk management systems. Waiting to invest (or halting investment) in these systems — technologically, culturally, and financially — can undermine business growth. When new regulations and industry standards take effect, rapidly overhauling processes to meet compliance requirements can interfere with AI-related innovation. It’s better to co-evolve RAI programs with AI development so they complement each other rather than create this unnecessary interference. As companies scale their AI investments, so, too, will their RAI investments need to grow. But those companies that are already behind will need to move quickly and invest aggressively in RAI to avoid always lagging behind.

3. Develop RAI investment metrics. Investing in RAI is complex, and what does a return on such an investment even look like? Much like with cybersecurity investments, the best RAI returns might be the sound of silence when AI-related issues are discovered before they create damage to brand reputation, regulatory issues, and increased costs. That said, silence should not be mistaken for adequate investment. As our survey results show, companies making minimal investment might not find issues simply because they aren’t looking for them. Leaders should align on what constitutes an RAI investment, the appropriate metrics to evaluate these investments, and who will decide on investment levels. Metrics that gauge RAI program success are necessary to support RAI programs over time, but they can be a lagging indicator. Metrics should also evaluate forward-looking dimensions such as leadership commitment, program adoption, training and workforce needs, and culture change. Leaders must also determine how RAI funding is related to funding for AI projects. Should every large AI project have a budget set aside for RAI? Should RAI funding be independent of specific projects? Or should it be some combination of project-based (decentralized) and institutional (centralized) investments?