Managing RAI Requires a Central Team

MIT Sloan Management Review and Boston Consulting Group have assembled an international panel of AI experts that includes academics and practitioners to help us gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide.

As companies increase their use of internally developed and third-party AI tools, including large language models, responsibly managing AI will become a more complicated, broader, and higher-stakes set of activities. Who, then, should manage RAI efforts? Should RAI management be centralized in a single team or decentralized, with accountability distributed across the enterprise? We wanted to understand how our expert panelists would address these questions, so we asked them to react to the following provocation: The management of RAI should be centralized in a specific function (versus decentralized across multiple functions and business units).

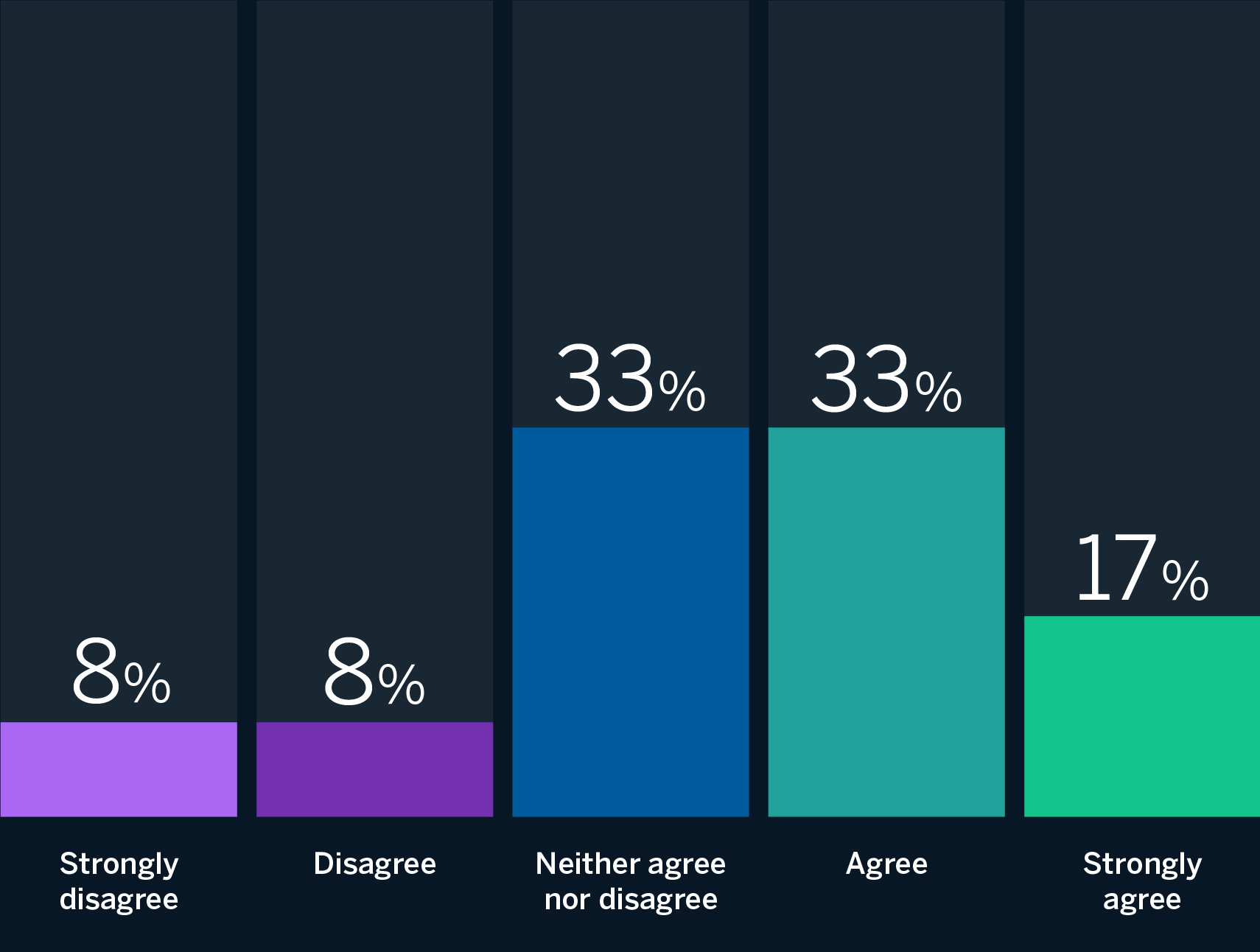

While half of the panelists agreed or strongly agreed that RAI management should be centralized, the vast majority of panelists (11 out of 12) indicated in their responses that managing RAI should be both centralized and decentralized. Proponents of this hybrid view shared these common perspectives:

- A centralized, cross-functional team is necessary to set RAI standards and practices and to have accountability for RAI efforts across the enterprise. A centralized team is needed to govern RAI practices.

- Management of RAI should also happen at the project level and across functions. It is the responsibility of everyone involved with AI implementations to embed, and abide by, RAI standards and practices. A decentralized approach is necessary to ensure that the organization, broadly, is responsible for RAI.

The Panelists Respond

Managing RAI should be centralized and decentralized.

While 50% of our panelists explicitly agree or strongly agree that RAI management should be centralized, 92% mentioned centralized management in their open-text response to this prompt.

Source: Responsible AI panel of 12 experts in artificial intelligence strategy. Percentages may not total 100% due to rounding.

The Case for Centralizing Management of RAI

A primary function of RAI efforts is to govern the use of AI across the enterprise. As a governance mechanism, RAI helps establish and communicate standards, ensures that standards are followed, cultivates a culture that embraces the responsible use of AI, and provides expertise to support AI product teams.

To deliver on these objectives, panelists encourage the creation of cross-functional or centralized teams. Linda Leopold, head of responsible AI at H&M Group, asserts that “someone needs to be in charge of the RAI strategy and vision — providing topic expertise, leadership, and coordination. This should preferably be handled by a specific, centralized team.” According to Riyanka Roy Choudhury, a CodeX fellow at Stanford Law School, “Leadership should assess, analyze, and create an integrated organizational structure to manage RAI across multiple functions and business units.”

Panelists were consistent in describing the purpose of a centralized team. Triveni Gandhi, responsible AI lead at Dataiku, says its function is to provide guidance and “clear accountability structures.” Giuseppe Manai, cofounder and chief operating officer of Stemly, adds, “A designated [RAI] unit sets ethical guidelines and standards for AI implementations across the organization. This unit acts as a focal point for RAI initiatives, equipped with specialized expertise in AI ethics and compliance. Their main task is to develop ethical frameworks that all other units and business functions must adhere to when implementing AI solutions.”

Intel has a cross-functional RAI council that conducts a rigorous review throughout the life cycle of an AI project, assessing and mitigating ethical risks. Elizabeth Anne Watkins, a research scientist at Intel Labs, notes that the council’s members “provide training, feedback, and support to the development teams and business units to ensure consistency and compliance with our principles across Intel.”

Cultivating an RAI culture often means cultivating RAI ambassadors, advocates, and influencers from across the enterprise. “To foster durable RAI cultures,” Watkins says, “it’s also helpful to complement this central team with a strong network of champions who can advocate for RAI principles within teams and business units.” Leopold concurs: “By having RAI champions or similar across functions or business units, the message … is that it is everyone’s responsibility to be responsible.” And, as Choudhury and UNICEF digital policy specialist Steven Vosloo observe, a centralized RAI function minimizes coordination costs between AI implementations and function-level RAI initiatives.

Strongly agree

“The responsibility of the central function is to set the change (RAI) in motion; make the organization aware; support business units in appointing RAI champions and ensuring that they are properly trained; make sure that the organizational AI governance model is understood and followed by the business units; liaise with other relevant areas, such as ESG, privacy, security, IT, legal, and AI; set up communities of practice; support the AI ethics committee; etc.”

The Case for Hybrid Management: Centralized Accountability, Distributed Responsibility

Even those panelists who responded in the negative shared views that were consistent with having a central team that has ultimate accountability for RAI. Vosloo’s response reflects the view of several panelists: “While there should be a dedicated function that is ultimately accountable for responsible AI, the practice of RAI is a whole-of-organization undertaking. A hybrid approach is thus ideal: a central function, made up of a diverse range of stakeholders in the organization, that relies on responsible practices across multiple functions and business units.”

Business unit and functional leaders who manage AI implementations are de facto managers of RAI implementations. As professor Simon Chesterman of the National University of Singapore says, “Strategic direction and leadership [for RAI] may reside in the C-suite, but operationalizing RAI will depend on those deploying AI solutions to ensure appropriate levels of human control and transparency so that true responsibility is even possible.” David R. Hardoon, CEO of Aboitiz Data Innovation and chief data and AI officer of Union Bank of the Philippines, contends that “the implementation-operationalization of governance needs to be decentralized and the responsibility of each specific function.”

Neither agree nor disagree

“It is often the case that different functions within an organization handle different areas of RAI — between engineering, security, product, legal, and so on. This is not only important but necessary. However, it is unhelpful if these functions operate completely independently. That is why having an oversight and governance function is critical — to provide a holistic perspective across these concurrent efforts.”

As with any governance mechanism, exerting too much control can have a chilling or disruptive effect on efforts to innovate with or implement AI. Belona Sonna, manager of ethics and gender issues for AfroLeadership, observes that a central governance mechanism needs to accommodate project complexity or else it will run afoul of broader goals.

Strongly disagree

“Although centralized management of responsible AI can guarantee that all projects follow the same circuit of control, this can quickly become a disadvantage if the project under examination is complex. Moreover, centralized management runs counter to the vision of responsible AI development, which would preferably involve all players in the development chain. This is why, in my opinion, decentralized management makes it possible to distribute the roles of each unit according to their expertise, to ensure not only positive interaction but also the involvement of all.”

Recommendations

For organizations seeking to establish an effective RAI management structure, we recommend the following:

- Establish a central, cross-functional team that has accountability for RAI efforts across the organization. It is important, however, to define what constitutes accountability for this team: What are the roles and responsibilities of this group? What does successful accountability look like? What measures and metrics can be used to evaluate success? Clearly establishing the role and decision rights of the central team is critical; otherwise, it will have the responsibility but none of the authority needed to be successful. One caution: Defining the central team’s accountability broadly or narrowly invites trade-offs. Defining RAI broadly — say, if it covers any use of AI anywhere in the enterprise — will introduce scalability challenges for the central team, creating additional organizational bottlenecks and risks. Defining accountability narrowly — say, only for recognized AI projects — can reduce the organization’s ability to identify and mitigate risks from other uses of AI.

- Ensure that centralized teams have a mix of strategic leaders and technical experts from across domains (such as AI development, risk, legal, and infosec). Their combined expertise, networks, and influence will strengthen efforts to establish and communicate standards, cultivate RAI champions, and support a broader culture of responsible AI development and use. Furthermore, a team that includes a mix of leaders and experts can better address the inherent nature of AI implementations, which embody both organizational and technical risks.

- Create feedback loops so that RAI governance is not a top-down process. Front-line managers can be a critical source of information about what is and is not working with RAI-related activities. No RAI program can be static. Constant iteration and improvement are needed to ensure a program’s ongoing effectiveness in light of organizational change, technology development, and shared lessons across the broader RAI community.

“RAI is governance, and the management of governance needs to be centralized in order to achieve its intended effectiveness of guidance and oversight. Furthermore, a centralized setup enables standardization, efficiencies of scale and scope, and lower coordination costs. The implementation-operationalization of governance needs to be decentralized and the responsibility of each specific function.”