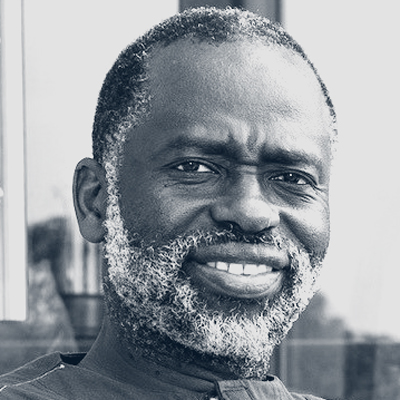

Professor Tshilidzi Marwala is the rector of the United Nations University (UNU) and a United Nations undersecretary general. Previously, he served as president (vice chancellor) of the University of Johannesburg. He has published more than 25 books and over 300 papers on AI and holds four international patents. He is a fellow of The World Academy of Sciences (TWAS), the American Academy of Arts and Sciences, the Chinese Academy of Sciences, and the African Academy of Sciences. Professor Marwala was a trustee of the Nelson Mandela Foundation. He holds a doctorate in AI and engineering from the University of Cambridge.

Voting History

| Statement | Response | Explanation |
|---|---|---|
| General-purpose AI producers (e.g., companies like DeepSeek, OpenAI, Anthropic) can be held accountable for how their products are developed. | Disagree | “While AI producers should be held accountable, many currently shield themselves through meticulously crafted terms of service that limit their liability. This creates a significant gap between ethical responsibility and legal accountability. Many companies include broad liability waivers, disclaiming responsibility for how their AI systems are used or any harm that might result from their deployment. AI systems are often provided ‘as is,’ with no guarantees of accuracy, safety, or reliability, effectively shifting responsibility to users. While companies often publish AI ethics guidelines, these are typically voluntary and unenforceable, allowing them to appear responsible without facing legal consequences.” |
| There is sufficient international alignment on emerging codes of conduct and standards for global companies to effectively implement RAI requirements across the organization. | Disagree | “I disagree, as there is not enough international alignment on responsible AI codes of conduct to enable global companies to effectively implement these requirements. While frameworks and guidelines exist, they are often region-specific and vary in scope and enforcement. This creates a fragmented landscape with conflicting regulations across jurisdictions. The implementation of ethical principles, such as fairness and transparency, also differs significantly across regions and industries, making it difficult for organizations to develop a uniform global approach.” |
| Companies should be required to make disclosures about the use of AI in their products and offerings to customers. | Strongly agree | “Companies should disclose their use of AI in their products and offerings to customers for transparency, trust, informed consent, accountability, ethical considerations, and consumer protection. Disclosures help companies comply with laws and regulations, ensure ethical practices, and encourage investment in robust, ethical, and reliable AI systems.” |
| Organizations will be ready to meet the requirements of the EU AI Act as they phase in over the next 12 months. | Disagree | “Organizations are still driven by profit motives. The EU AI Act is an important milestone. However, some of the requirements of the act, such as transparency, are either expensive or the technology is not fully developed. For example, how do you make generative AI transparent?” |
| Organizations are sufficiently expanding risk management capabilities to address AI-related risks. | Disagree | “The maximization of profit through AI is more incentivized than addressing AI-related risks. We need to design regulatory mechanisms that will incentivize addressing AI risks.” |
| As the business community becomes more aware of AI’s risks, companies are making adequate investments in RAI. | Disagree | “The nature of companies is that they are driven by profit motive. It is important that these companies incorporate a culture of sustainability into their ways of doing business. I think about 20% of the companies aware of AI risks are investing in RAI programs. The rest are more concerned about how they can use AI to maximize their profits.” |
| The management of RAI should be centralized in a specific function (versus decentralized across multiple functions and business units). | Neither agree nor disagree | “The management of RAI requires both centralization and decentralization. For example, the responsible use of data should be centralized. In contrast, the use of AI in specific, localized contexts should be decentralized into units that better understand the issue at hand.” |
| Most RAI programs are unprepared to address the risks of new generative AI tools. | Agree | “Even though there are many features of generative AI that are similar to classical AI’s, such as the use of data and neural networks, there are aspects that we should pay attention to. For example, what other things can we generate? Can we generate an entire world based on falsehoods, and what will this mean for humanity? What will this technology mean for education? Do we continue educating as we have been doing for the past 100 years, or do we craft an entirely new education system? What does generative AI mean for the whole creative industry, and what is the role of people in this new value chain? What will generative AI mean for the labor market, and what will this mean for the future of work? Many of these questions are valid in classical AI, but they become more valid in generative AI.” |
| RAI programs effectively address the risks of third-party AI tools. | Disagree | “The way AI is implemented today involves integrating tools developed by many different agencies. Very often, these tools are used as they are. The question that one has to bear in mind is whether it is possible to create a regime where these tools are certified to be responsible. This will require minimum standards that do not currently exist. So we hope, for example, that a deep learning system uses an optimization algorithm that is responsible. We need to change the paradigm and ensure that when these tools are developed, the standard requirement is to always ask whether the tools we are developing are responsible. We need to ensure that this is audited. Will this slow down the development process? Yes, it will, but this is a fair price to pay to get AI that is responsible.” |
| Executives usually think of RAI as a technology issue. | Disagree | “Executives tend to think of RAI as a distraction. In fact, many of them know very little about RAI and are preoccupied with profit maximization. Until RAI finds its way toward regulations, it will remain in the periphery.” |
| Mature RAI programs minimize AI system failures. | Agree | “RAI is based on a set of specifications, procedures, and guidelines to develop trustworthy AI systems. If we consider that the objective of RAI is trustworthiness based on the principles of accountability, justice, transparency, and responsibility, then it stands to reason that systems programmed in this manner are less susceptible to system failures. RAI also implies responsible use of AI systems, which bolsters this argument. While this does aid in creating more responsible and ethical systems, there is scope for a more standardized approach to RAI guidelines to ensure conformity in standards, which would go a long way toward ensuring that there is a systematic and blanket approach to any challenges that may arise.” |
| RAI constrains AI-related innovation. | Strongly disagree | “It is important to note that we are seeing incredible instances of innovation with AI, which is addressing our health care concerns, bridging our linguistic and cultural gaps, and laying down the foundations for a more equitable world. That is not to say that there is no room for the misuse of AI. We have already seen instances of this with harmful biases present in some AI machines. In 2015, dozens of AI experts signed an open letter that warned that ‘we cannot predict what we might achieve when this intelligence is magnified by the tools AI may provide.’ With the rapid and far-reaching impact of AI, there is a corresponding exigency for explorations of ethics, justice, fairness, equity, and equality. A focus on regulation, ethics, and cultural aspects of the internet is key, not only to create an enabling policy environment to support private and nongovernmental organizations as well as the state, but to ensure ethical and transparent use of these new technologies. This does not hamper innovation holistically but addresses concerns around harmful innovation.” |
| Organizations should tie their responsible AI efforts to their corporate social responsibility efforts. | Strongly agree | “Responsible AI is in many ways a human rights issue. When AI harms people, even when it is creating financial value to the shareholders, we should be concerned. As we embed AI in all aspects of organizations, it is important to continuously investigate the cost-benefit consequences. Naturally, the costs should include how responsible the deployed AI is. AI algorithms often arrive at organizations neutral and become biased based on the data they gain while they are used. It is therefore important to always keep in mind that much of the work to ensure responsible AI is done by people in organizations, and therefore responsible AI should become part of the culture in organizations.” |
| Responsible AI should be a part of the top management agenda. | Strongly agree | “Responsible AI is about the protection of people and remaining within the bounds of the law, so it is important.” |