Why Top Management Should Focus on Responsible AI

In collaboration with

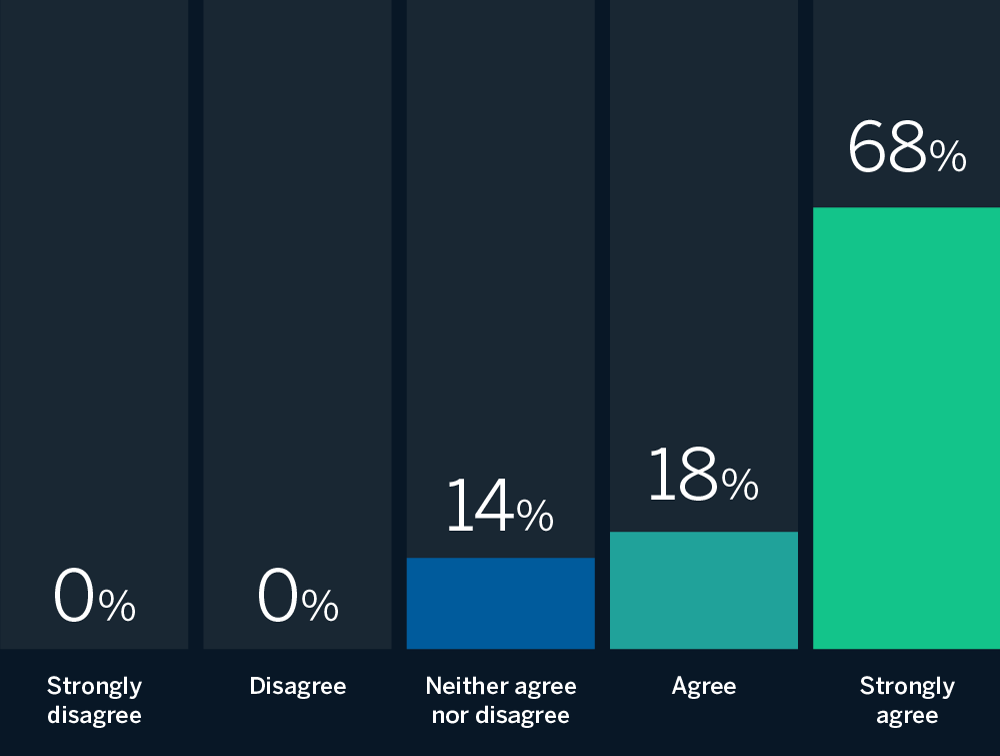

MIT Sloan Management Review and BCG have assembled an international panel of AI experts, including academics and practitioners, to help us gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide. This month's question for our panelists: Should RAI be a top management agenda item at organizations across industries and geographies?¹ Eighty-six percent of them (18 out of 21) agree or strongly agree that it should be. Taken together, their replies offer a compelling rationale for top management to oversee RAI efforts. We distill and explain this rationale below.

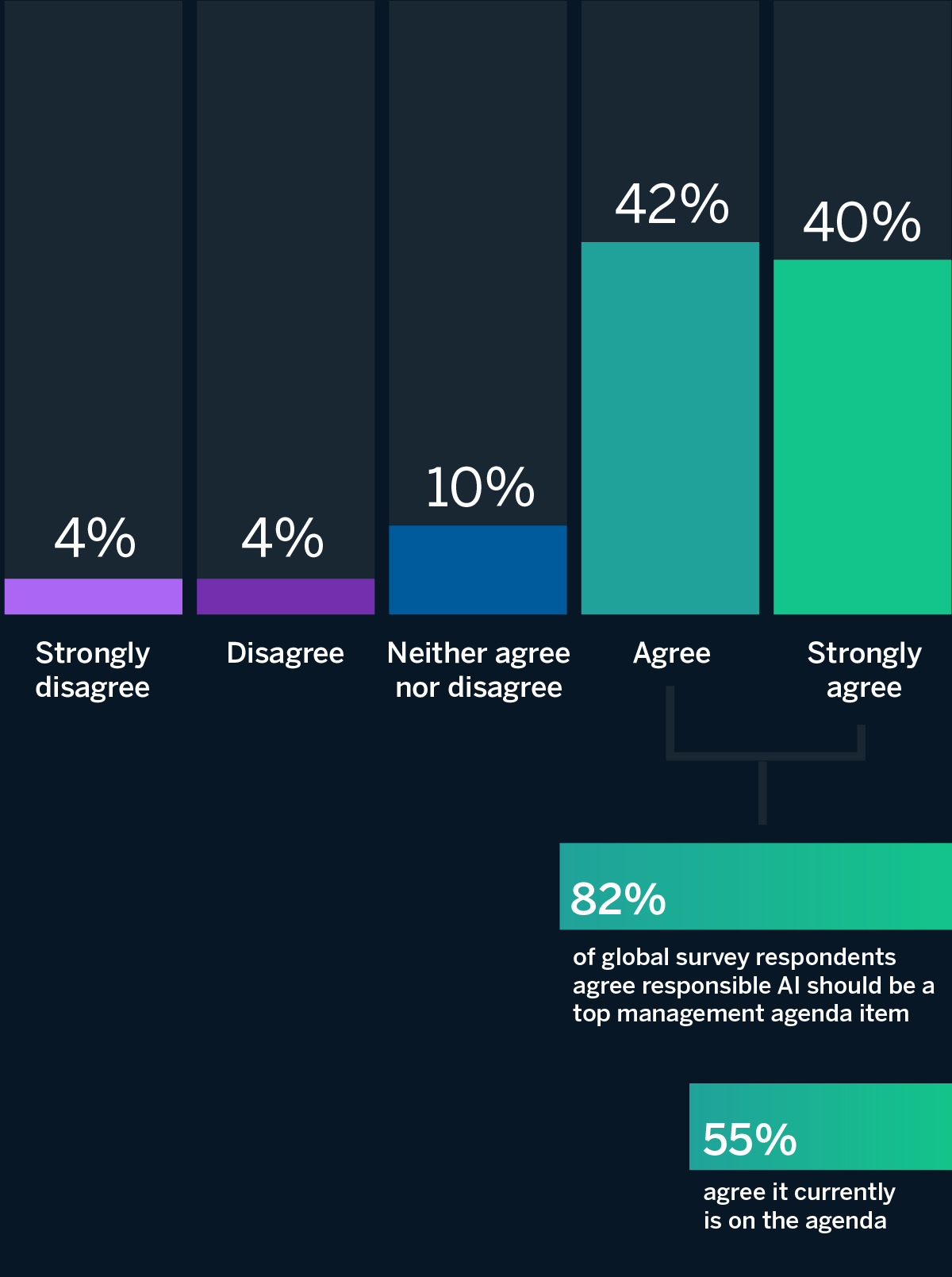

We also conducted a global survey of more than 1,000 executives that generated similar findings: Eighty-two percent of managers in companies with at least $100 million in annual revenues agree or strongly agree that RAI should be part of their company's top management agenda. Unfortunately, only about half (55%) of the respondents in that same survey reported that RAI is in fact on their top management's agenda, a dramatic gap between expectations and reality.

Below, we share some of the insights of our RAI panelists. Then, drawing on our panelist responses and our own experience working with RAI initiatives, we offer three practical steps toward making RAI a top management agenda item.

The Panelists Respond

Responsible AI should be a part of the top management agenda.

Eighty-six percent of our expert panelists agree responsible AI should be a top management agenda item.

Source: Responsible AI panel of 22 experts on artificial intelligence strategy.

Responses from the 2022 Global Executive Survey

While 82% of global survey respondents agree responsible AI should be a top management agenda item, only 55% agree it currently is.

Source: MIT SMR survey data (excluding respondents from Africa and China), combined with supplementary Africa and China data fielded in-country; n=1,202.

Reasons to Focus on Responsible AI

A strong majority of our panelists agree that RAI should be a top management concern. But their reasons — or what they chose to emphasize — represent two distinct sets of concerns. One set relates to how a company’s use of AI affects external stakeholders, such as customers and society, as part of its broader corporate strategy and social purpose. The other set is focused on internal stakeholders and regards the support of company leadership and management as vital to effective RAI efforts.

These two sets of reasons echo the distinction between strategy for and with AI. A strategy for AI is different from a corporate strategy with AI: As David Kiron and Michael Schrage explain in a 2019 MIT SMR article, “Strategies for novel capabilities demand different managerial skills and emphases than strategies with them.” Ideally, responsible AI should be connected to both a strategy for and with AI, with a focus on both internal and external stakeholders. Several panelists explicitly acknowledge this connection in their remarks.

Focus on External Stakeholders

Executives are increasingly discussing their company’s social purpose in nonfinancial terms. They recognize that AI has the potential to advance or undermine an organization’s social purpose. Some panelists make a point of emphasizing that RAI should be a top management concern because AI can have a significant impact on many external stakeholders. Jaya Kolhatkar, chief data officer at Hulu and executive vice president of data for Disney Streaming, offers a case in point: “Given that our storytelling has the power to shape views and impact society, we need to ensure that our use of AI represents both our content and the audience inclusively.” Slawek Kierner, senior vice president of digital health and analytics at Humana, sees the company’s use of AI as having a large impact on human life by improving health outcomes and reducing costs. He describes top leadership’s focus on RAI as “fundamental” to delivering trustworthy AI solutions that patients and clinicians are willing to adopt.

Strongly agree

“Artificial intelligence is already reshaping our society and world. In the workplace, AI is powerful; it can augment and extend the capabilities of employees, enhance human decisions, and increase productivity. As we navigate increasing complexity and the unknowns of an AI-powered future, establishing a clear ethical framework isn’t optional. It’s vital for its future.”

Focus on Internal Stakeholders

Whatever a company chooses to do with AI inside the organization — whether it’s improving productivity and product design or reducing costs — its strategy for AI depends on having responsible AI practices in place, several panelists observe. Linda Leopold, head of responsible AI and data at H&M Group, offers a clear statement to that effect: “Responsible AI must be seen as an integrated part of the AI strategy, not as an add-on or an afterthought.” IBM AI Ethics global leader Francesca Rossi adds, “Any company building, using, or deploying AI should make sure that AI systems are not only accurate but also trustworthy (fair, robust, explainable, etc.). … A responsible AI strategy needs to be defined and be used to support the whole AI ecosystem in the entire company.”

Top management can play a key role in ensuring that these RAI practices are broadly embraced and implemented as part of the company’s strategy for AI. Steven Vosloo, digital policy specialist in UNICEF’s Office of Global Insight and Policy, contends that “it is not enough to expect product managers and software developers to make difficult decisions around the responsible design of AI systems when they are under constant pressure to deliver on corporate metrics. They need a clear message from top management on where the company’s priorities lie and that they have support to implement AI responsibly.” Belona Sonna, a Ph.D. candidate in the Humanising Machine Intelligence program at the Australian National University, adds, “The decision to subscribe to responsible AI must be carried out by the management team for its implementation to be effective.”

Focusing on Both Internal and External Stakeholders

Some panelists explicitly recognize that responsible AI is essential to being a responsible business, both operationally and strategically. For example, Katia Walsh, chief global strategy and AI officer at Levi Strauss & Co., observes, “Every company is now a technology company, and building a responsible AI framework from the start is critical to running a responsible business. Data, digital, and AI are core to how companies connect with consumers, drive internal operations, and chart future strategies.” Richard Benjamins, chief AI and data strategist at Telefónica, specifically emphasizes a company’s social responsibility. “Companies that make extensive use of artificial intelligence, either for internal use or for offering products to the market, should put responsible AI on their top management agendas,” he says. “For such companies, it is important to monitor — on a continuous basis — the potential social and ethical impacts of the systems that use AI on people or societies.” As companies integrate AI into their operations and corporate strategies, top management should prioritize and bolster responsible AI efforts.

Strongly agree

“Top management seeking to realize the long-term opportunity of artificial intelligence for their organizations will benefit from a holistic corporate strategy under its direct and regular supervision. Failure to do so will result in a patchwork of initiatives and expenditures, longer time to production, damages that could have been prevented, reputational damages, and, ultimately, opportunity costs in an increasingly competitive marketplace that views responsible AI as both a critical enabler and an expression of corporate values.”

Cautions

Three of our panelists (14%) neither agree nor disagree that RAI should be a top management issue. They offer several reasons why top management's focus on RAI is not enough on its own. Carnegie Mellon associate professor of business ethics Tae Wan Kim observes that top management is fallible: "We need more evidence about whether having responsible AI as part of the top management agenda makes a positive or negative impact." He cites Google's firing of a prominent AI ethicist, Timnit Gebru, as an example of top management's troubling decision-making on issues related to RAI. Another open question is whether leaders themselves are buying into the cultural transformation necessary to adopt AI and RAI. H&M's Leopold cautions that companies need grassroots buy-in from front-line employees just as much as they need top management attention. Meanwhile, Brian Yutko, vice president and chief engineer for sustainability and future mobility at Boeing, asserts that not every AI application merits top management's attention: "Some applications need significantly more senior leadership oversight than others," he notes.

David R. Hardoon, chief data and AI officer at UnionBank of the Philippines, invites the question: How does RAI fit with a company’s broader goals for being a responsible business? For example, is top management intentionally connecting RAI with its corporate social responsibility efforts, in terms of governance, methods, and processes?

Neither agree nor disagree

“The instinctive and seemingly obvious answer is ‘yes,’ obviously. I nonetheless hesitate with the instinctive and straightforward ‘yes,’ as I believe it is important to first understand the broader approach that an organization takes toward the traits of responsibility.”

Bridging the Gap

With these cautions in mind, leaders can take the following three steps to put RAI on their top management agendas:

- Educate top management. It’s easy to say the right things about the importance of responsible AI. It’s also easy to recognize the importance of making such statements. But understanding why RAI is a material concern to corporate strategy may require education. Without that education, RAI may not make it onto the top management agenda, or stay there.

- Assess whether RAI is part of your strategy for AI or part of a larger discussion of corporate goals, including corporate social responsibility. If top management views RAI as a strictly operational issue, it may not make it onto the top management agenda.

- Encourage urgency. If using AI is on the top management agenda, RAI should be as well. Waiting for AI to gain traction in your enterprise and then adding RAI after the fact invites risky behaviors and outcomes. To avoid those issues, as many panelists note, it’s better to regard RAI as a top management issue earlier rather than later.

References

1. We define the top management agenda as a small list of named strategic priorities or a regular agenda item at leadership meetings.
