Navigating the Technology Landscape of Innovation

Every organization has its strengths and its weaknesses. To emphasize the former while minimizing the latter, companies often devote considerable resources to their corporate strategies, for example, crafting the perfect plan to outmaneuver the competition in an emerging market. At the same time, those same companies may give short shrift to the essential task of determining exactly what their strategy for product innovation should be. The result: R&D projects that are out of sync with the rest of the organization.

Developing the right strategy for product innovation is hardly a simple matter. In fact, it is a complex undertaking that first requires a fundamental understanding of how technical modularity affects R&D efforts. In a modular design, a change in one component of a product (the heating element of a coffeemaker, for instance) has relatively little influence on the performance of other parts or the system as a whole. In a nonmodular, or “coupled,” design, the components are highly interdependent, and the result is nonlinear behavior: A minor change in one part can cause an unexpectedly huge difference in the functioning of the overall system. With semiconductors, for instance, a minuscule impurity (just 10 parts in a billion) can dramatically alter silicon’s resistance by a factor of more than 10,000. Generally speaking, modular designs make R&D more predictable, but they tend to result in incremental product improvements instead of important advances. Coupled designs, on the other hand, are riskier to work with, but they are more likely to lead to breakthroughs.

This trade-off between predictability and innovation can be visualized as a “technology landscape,” with gently sloping hills corresponding to incremental product improvements that are based on modular components and soaring, craggy peaks representing breakthrough inventions that rely on tightly coupled parts. Developing new products requires a search across such technology terrain. For a company like Dell Computer that can compete on its efficient manufacturing and superb supply-chain management, avoiding the rugged peaks and instead traversing the sloping hills is an effective strategy. Other corporations like Apple Computer need to scale the high peaks to maintain their competitive advantage. For such expeditions, one approach is to minimize risk by developing a “map” of the topography — that is, by gaining an understanding of the underlying science of the technologies being used.

The concepts of “technology landscapes” and “maps” serve as powerful metaphors for understanding why some companies have profited from their R&D efforts whereas others have stumbled. Indeed, the framework is a valuable tool for helping organizations make important decisions about their innovation processes and resource allocation. For instance, a firm that is having great difficulty moving products through R&D into manufacturing could be exploring an area of the technology landscape that is too rugged. Instead, the company might try to work in less precarious terrain by using more modular components (perhaps standardized parts). Or it might greatly benefit from a large investment in basic science to develop a map of the landscape that will help researchers avoid technological pitfalls. By addressing such issues, an organization can ensure that its strategy for product innovation is best suited to its competitive strengths.

Technology Landscapes

We start with a simple and classic idea: Inventions combine components — whether they be simple objects, particular practices or steps in a manufacturing process — in new and useful ways. An inventor can create novel products either by rearranging and refining existing components or by working with new sets of them. For example, the steamship unites the steam engine with the sailing ship. The automobile merges the bicycle, the horse carriage and the internal combustion engine. DNA microarrays — devices that enable scientists to investigate the efficacy or toxicity of a trial drug — rely on semiconductors, fluorescent dye and nucleotides. And the emerging field of nanomanufacturing combines techniques from the semiconductor, mechanical and biotech industries.

In a technology landscape, the summits correspond to inventions that have successfully merged different components, and the valleys represent failed combinations. (The concept of technology landscapes was tested in an extensive study of more than 200 years of data from the U.S. Patent Office. For details, see “About the Research.”) Metaphorically speaking, inventors seek out the peaks on this landscape, while trying to avoid the chasms in between.

When researchers understand neither the components they are working with nor how those parts interact, they search blindly in a fog, unable to see the surrounding peaks and valleys. Because of this, the researchers (and their managers) prefer cautious forays: small adjustments to proven concepts, a process known as local, or incremental, search. Such an approach is attractive because researchers know from experience that the minor enhancements will likely work and receive at least reasonable acceptance in the market. And local searching works particularly well on terrain dominated by a single peak like Mt. Fuji. For such a landscape, inventors simply need to travel uphill to discover the next invention. A disadvantage here, though, is that even the person who is first to reach the top gains, at best, a fleeting technological advantage over competitors.

Now consider a different landscape, such as the Alps, that is characterized by a multitude of crests. Here, inventors searching in the fog will miss most of the great inventions because they are situated beyond an abyss of technological dead ends. Proceeding slowly uphill will typically leave people stranded on some local hill far below the soaring heights of a Mt. Blanc. And once stranded on a local peak, any direction they move will lead downhill. Because of the fog, they cannot see the heights beyond the neighboring valleys, so they do not know in which direction to head or whether to quit altogether. Thus, on such rugged landscapes, local searching will almost certainly fail, even after enormous investments of time and effort. Instead, such explorers need some sort of map, even an approximate one. With it, they could anticipate the rough terrain and decide how best to reach a summit. Having finally arrived there, they would have gained a strong technological advantage over rivals, particularly those that lack a map.
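The contrast between Mt. Fuji and the Alps can be made concrete with Kauffman's NK model, a standard way to generate landscapes of tunable ruggedness. The sketch below is an illustration under stated assumptions, not necessarily the model behind the authors' patent study. A design has N components, each in state 0 or 1. Each component's contribution to performance depends on its own state and on K other components, so K = 0 is a fully modular design and K = N - 1 a fully coupled one. All function names and parameter values here are hypothetical.

```python
import itertools
import random

def make_nk_landscape(n, k, seed=0):
    """Return a performance function over n-component designs (NK model).

    Each component i contributes a random value that depends on its own
    state and on the states of the next k components (wrapping around).
    k = 0 is a fully modular design; k = n - 1 is a fully coupled one.
    """
    rng = random.Random(seed)
    # One lookup table per component, keyed by the k + 1 states it depends on.
    tables = [
        {states: rng.random() for states in itertools.product((0, 1), repeat=k + 1)}
        for _ in range(n)
    ]

    def performance(design):
        total = 0.0
        for i in range(n):
            key = tuple(design[(i + j) % n] for j in range(k + 1))
            total += tables[i][key]
        return total / n

    return performance


def neighbors(design):
    """All designs that differ from `design` in exactly one component."""
    for i in range(len(design)):
        changed = list(design)
        changed[i] = 1 - changed[i]
        yield tuple(changed)


def local_search(performance, start):
    """Incremental search: take the best one-component improvement until none exists."""
    current = start
    while True:
        best = max(neighbors(current), key=performance)
        if performance(best) <= performance(current):
            return current  # stranded: every one-step change leads downhill
        current = best


def count_peaks(performance, n):
    """Count designs that no single-component change can improve."""
    return sum(
        all(performance(nb) < performance(d) for nb in neighbors(d))
        for d in itertools.product((0, 1), repeat=n)
    )


N = 8
designs = list(itertools.product((0, 1), repeat=N))
for K in (0, N - 1):
    perf = make_nk_landscape(N, K, seed=1)
    summit = max(designs, key=perf)
    successes = sum(local_search(perf, d) == summit for d in designs)
    print(f"K={K}: {count_peaks(perf, N)} peaks; local search reaches the "
          f"summit from {successes} of {len(designs)} starting designs")
```

With K = 0 the landscape has exactly one peak, and incremental search reaches it from every starting design. With K = N - 1 the same search typically gets stranded on one of many local peaks, because every one-step move from those peaks leads downhill.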

Obviously, exploring rugged terrain (that is, working with tightly coupled systems) is an unpredictable and risky undertaking. But, as mentioned earlier, the payoffs can be huge for those who make landmark discoveries that others have difficulty replicating. That said, trying to achieve those breakthroughs can be a massive endeavor that not every organization should attempt. Indeed, many companies are better suited for exploring less rugged terrain (that is, working with modular components).

Smooth Landscapes With Sloping Mountains

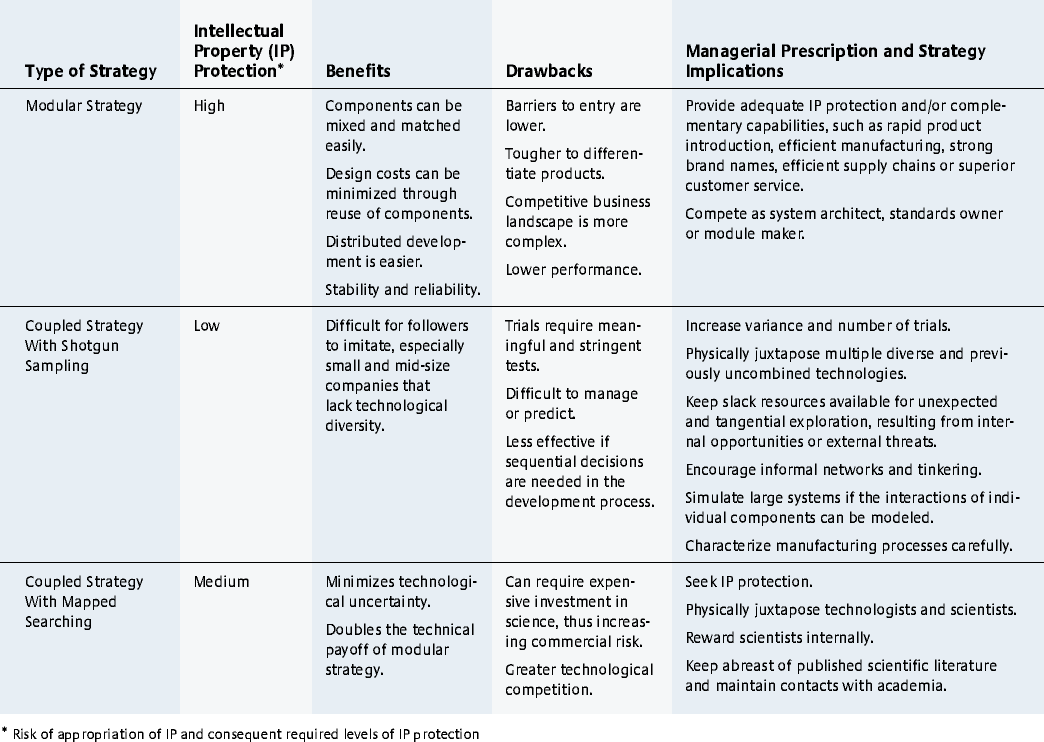

A modular recombination strategy offers numerous benefits.1 (See “Summary of Strategies for Product Innovation.”) It reduces design costs and expenditure of time because people can reuse proven components to quickly build complex systems that work. Furthermore, because the components have little interaction, companies can deploy efficient distributed-development approaches in which various teams of researchers work in parallel on the components.

Summary of Strategies for Product Innovation

Exploring a smoother technology landscape, however, has its drawbacks. Although modular recombination decreases the risk of a failed invention, it also limits the upside potential because blockbuster innovations become far less likely. Also, modular systems take a performance hit because they cannot fully exploit the potential synergies among components. For example, in computer workstations, Sun Microsystems was able to develop working systems relatively easily because of its use of modular microprocessors, but for years the computational power of the company’s workstations lagged that of Apollo Computer, which relied on customized (and more coupled) designs. Eventually, though, Sun eclipsed Apollo as the latter found it difficult to sustain its pace of innovation.

Excessive modularity also makes it difficult to maintain a competitive advantage. Because modular components combine so readily, rivals can easily copy a modular product. As this technical know-how spreads, the industry can become commodity-driven, resulting in a low barrier to entry, numerous competitors and little differentiation among them. The personal computer market is a case in point. Ironically, as the technology landscape of innovation becomes simpler to navigate, the competitive landscape can become treacherously complex.

Competing on a smooth technology landscape thus requires strong legal protection of intellectual property, complementary capabilities (low-cost manufacturing, for instance) or a combination of both. Patents can provide some security from copycat companies, but that protection typically erodes as the technology matures and outsiders learn how to design around the patents. Without a strategy for safeguarding its intellectual property, a firm must rely on complementary strengths, such as fast product development, lean manufacturing or superior customer service. Dell, for instance, benefits not from revolutionary product development but from exemplary supply-chain management and strong execution of its business model. Those strengths enable the company to succeed in a market in which standard interfaces and protocols have allowed even consumers to build their own PCs by mixing and matching parts (monitors, microprocessors, disk drives and so on).

Another example of a successful modular approach is the Walkman. To develop that landmark product, Sony engineers first built a library of standard, interchangeable components. Doing so shifted the company’s product search to a smooth landscape, allowing it to find useful combinations with relative ease. Sony was able to maintain its competitive advantage not because its initial position was unassailable, but because the company was always able to stay at least one step ahead of the competition. Modular recombination enabled rapid innovation, allowing Sony to continually inundate the market with product changes and improvements, which made it difficult for competitors to keep pace. Sony also had the manufacturing expertise and marketing muscle to keep rivals at bay. The success of the Walkman also holds another important lesson: Although modular designs tend to result in incremental product improvements, they can sometimes result in a series of inventions that become commercial blockbusters.

To pursue a modular strategy for product innovation, a company must first direct its R&D group to use existing standards, particularly if they are widely accepted. If standards have yet to emerge, engineers should develop their own standardized interfaces and protocols before embarking on a new product design. And after establishing such proprietary standards, the organization should actively pursue their widespread adoption in the industry, especially if competing standards are emerging. One effective tactic is to outsource any manufacturing of the standardized components to encourage suppliers to develop parts that they can sell to other companies, thus accelerating the proliferation of the standards throughout the industry. (Incidentally, Sun Microsystems pursued exactly this approach to outmaneuver Apollo Computer.)
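In software terms, a published standard works like an interface that each part is checked against on its own. The toy sketch below assumes a hypothetical PC-style catalog; the slot names, vendors and connector labels are all invented. It shows the consequence described above. Compatibility is judged slot by slot against the standard, never against the other parts chosen. As a result, every combination of compliant parts is a working system, and each team or supplier can verify its component in isolation.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class Part:
    slot: str        # e.g. "cpu", "drive", "monitor"
    vendor: str
    connector: str   # the interface the part exposes

# Hypothetical catalog: all names and connector labels are made up.
CATALOG = {
    "cpu": [Part("cpu", "A", "std-socket"), Part("cpu", "B", "std-socket")],
    "drive": [Part("drive", "C", "std-bus"), Part("drive", "D", "std-bus"),
              Part("drive", "E", "custom-bus")],
    "monitor": [Part("monitor", "F", "std-video"), Part("monitor", "G", "std-video")],
}

# The (hypothetical) published standard: which connector each slot accepts.
STANDARD = {"cpu": "std-socket", "drive": "std-bus", "monitor": "std-video"}

def compatible(part: Part) -> bool:
    """A part fits if it honors the standard for its slot; no other part matters."""
    return part.connector == STANDARD[part.slot]

def valid_builds(catalog):
    """Because checks are per slot, every combination of compliant parts works."""
    choices = [[p for p in parts if compatible(p)] for parts in catalog.values()]
    return list(product(*choices))

builds = valid_builds(CATALOG)
print(len(builds), "working configurations")  # 2 x 2 x 2 = 8
```

The proprietary drive from vendor "E" drops out, while every standard part mixes freely with every other. That mix-and-match freedom is exactly what makes the design space smooth, and also what makes it easy for rivals to copy.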

The structure of an R&D group can also be a major factor. To reinforce modular-design principles, companies should allocate product development responsibilities to independent teams, each dealing with its own component of the system. This approach of distributed development can subtly motivate researchers and engineers to adopt standards and pursue modular designs. Otherwise, they will struggle with great inefficiencies as the components they develop have trouble working with the parts that other teams have built.

Rugged Landscapes With Craggy Peaks

As noted earlier, a coupled strategy for product innovation is a high-risk/high-return endeavor. Companies that are fortunate enough to succeed with such an approach will occupy an almost ideal position. Discoveries from this process typically involve much tacit knowledge, and a competitor will find it difficult to replicate those results because they will likely require an understanding of the underlying principles. Hence, intellectual property protection and complementary capabilities matter less than they do for a modular strategy. (Indeed, keeping an invention secret might protect it more effectively than a patent, because the patent application process requires disclosure of technical details.) To pursue coupled designs, however, companies need an effective strategy for dealing with the daunting complexity of rugged technology landscapes.

Shotgun sampling.

One approach is to generate an enormous number of random trials and then subject those to rigorous selection criteria. Instead of understanding the interdependencies among components that generate the landscape, inventors simply apply the magic of large numbers in a manner similar to the evolutionary process of natural selection. The successful implementation of this strategy of shotgun sampling requires two things: methods to generate variation cheaply and accurate tests to assess the value of those variations.
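The two requirements named above, cheap variation and an accurate test, correspond directly to the two arguments of the generic loop sketched below. It is a minimal illustration under stated assumptions. The `random_design` generator and the `hours_lit` scoring rule are invented stand-ins that loosely echo the light-bulb example that follows; they are not real data.

```python
import random

def shotgun_sample(generate, test, trials, seed=0):
    """Try many cheap random variants and keep the one the test scores highest.

    No model of how components interact is needed: only a way to produce
    variants (`generate`) and a way to rank them (`test`).
    """
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(trials):
        candidate = generate(rng)
        score = test(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


# Hypothetical design space: four filament materials, a vacuum level and a voltage.
MATERIALS = ["material_a", "material_b", "material_c", "material_d"]

def random_design(rng):
    return (rng.choice(MATERIALS), rng.uniform(0.0, 1.0), rng.uniform(50.0, 150.0))

def hours_lit(design):
    # Invented scoring rule standing in for a real, rigorous lab test.
    material, vacuum, volts = design
    base = {"material_a": 5, "material_b": 10, "material_c": 40, "material_d": 15}[material]
    return base * vacuum * max(0.0, 1 - abs(volts - 110.0) / 110.0)

design, score = shotgun_sample(random_design, hours_lit, trials=1_000)
print(design, round(score, 1))
```

With a fixed seed, raising `trials` can only keep or improve the best score found, because the larger run samples a superset of the same candidates. More ammunition helps, but the answer is only as good as the test.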

Thomas Edison’s laboratory in Menlo Park, New Jersey, one of the most successful research facilities in history, operated under the principles of shotgun sampling. Edison brought together a host of gadgets, chemicals, compounds and technologies under one roof and staffed his inventor’s dream house with a wide variety of technical and craft professionals. The layout of his lab further facilitated rapid shotgun sampling by juxtaposing seemingly unrelated technologies in the same work area and by encouraging his staff to search far and wide for strange combinations. In developing his most famous invention, the light bulb, Edison tried some 1,000 combinations of filament materials, vacuum pressures, voltages, bulb shapes and so on, running each against an obvious and rigorous test: How long does the bulb stay lit?

In today’s world, computer simulation offers a powerful tool for improving the efficacy and reducing the costs of running myriad trial-and-error tests. Inventors can take relatively inexpensive, virtual samples of large swathes of the technology landscape instead of having to perform costly physical trials. BMW, for instance, builds computer models of prototype cars and then “crashes” them in virtual simulations. Through this work, the company has been able to investigate new designs for improving the safety of its vehicles. BMW then builds physical prototypes to verify the final designs.2 To be effective, computer simulation requires an accurate means of modeling the interactions between individual components as well as reliable tests to determine which designs are superior.

The real payoff comes when engineers simply cannot manually predict the complex behavior of a system that emerges from the interactions of thousands or millions of components. For example, the success of microprocessors based on reduced instruction set computing (RISC) in the 1980s hinged on the use of computer simulation — a massive research undertaking that required the efforts of Hewlett-Packard, IBM, MIPS, Stanford University and the University of California at Berkeley. Engineers had to simulate the effects of increasing a microprocessor’s cache memory size or of implementing certain instructions in hardware, because they could not predict how such changes would affect the chip’s ability to run various complex software programs. As a result of this work, the annual rate of improvement in microprocessors increased from 35% to 55%.
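The jump from 35% to 55% a year may sound modest, but these rates compound. As an illustration, assume each rate held steady for a decade. A quick calculation then shows the gap: roughly a 20-fold improvement versus roughly an 80-fold one.

```python
def cumulative_gain(annual_rate, years):
    """Overall performance multiple after compounding an annual improvement rate."""
    return (1 + annual_rate) ** years

for rate in (0.35, 0.55):
    # 0.35 -> about 20x over 10 years; 0.55 -> about 80x
    print(f"{rate:.0%} a year for 10 years: about {cumulative_gain(rate, 10):.0f}x")
```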

An organization that performs shotgun sampling across a rugged technology landscape can deploy a variety of techniques to increase its odds of success. First and foremost, it can work with many diverse technologies, and it can physically juxtapose researchers from seemingly unrelated fields to ensure that inventors are not straitjacketed into technological and functional silos. It can also promote a relatively unstructured workplace. For example, 3M’s policy of allowing its researchers to spend one day per week on their own projects encourages them to pursue random and unexpected tangents. A company also needs to be constantly on the lookout for unexpected breakthroughs, even if they appear far from the original target. Lastly, before a company releases the results of a shotgun-sampling strategy into production, it must ensure that its manufacturing process is reliable over a wide range of parameters. One caveat: If people do not understand how a technology works in the lab, identifying the root cause of problems in manufacturing will prove nearly impossible, as has been the case with many semiconductor companies and their fabrication facilities.

A crucial point to remember is that shotgun sampling requires clear and stringent test criteria. Otherwise, the process could result in terrible inefficiencies and frustrated researchers. In the pharmaceutical industry, for example, researchers face daunting challenges because of the interactions among a drug molecule, the system used to deliver that compound to its target, and the idiosyncratic phenotype of each individual patient. Rather than attempting to understand all of those intricate, coupled interactions, Merck and other pharmaceutical firms instead have developed methods to test huge arrays of random combinations of potential drugs simultaneously against multiple targets. Such targets could include, for example, leptin receptors for obesity, complement receptors for inflammation and interleukin for allergies. The companies increase the variance of their trials by constantly seeking out new compounds to expand their libraries. But this approach has been slow to yield new drugs, partly because it lacks meaningful and effective tests. Researchers often have a limited understanding of the target diseases and must represent them by using simple cell-based assays that do not accurately model how a potential drug will work in humans. Because of that, companies often do not learn that a certain drug is a failure until much later, in expensive clinical trials. In summary, shotgun sampling works best when a company has lots of cheap ammunition, multiple targets and clear signals indicating that one of those targets has been hit.
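A small simulation shows what a weak test costs. In the purely illustrative sketch below, each hypothetical candidate compound has a hidden "true" efficacy. A cheap assay reports that efficacy plus random noise; the noise levels are assumptions, not measurements. Selection is always "pick the top assay reading," and only the quality of the test changes between runs.

```python
import random

def select_best(candidates, test):
    """Shotgun-sampling selection step: keep the candidate the test scores highest."""
    return max(candidates, key=test)

rng = random.Random(42)
# Hypothetical: each candidate compound has a hidden true efficacy in [0, 1).
true_efficacy = [rng.random() for _ in range(10_000)]
candidates = range(len(true_efficacy))

def clinical_truth(i):
    """What an (expensive) clinical trial would eventually reveal."""
    return true_efficacy[i]

def make_cheap_assay(noise, seed=7):
    """A proxy test, such as a simple cell-based assay, that sees efficacy plus noise."""
    noise_rng = random.Random(seed)
    readings = [v + noise_rng.gauss(0.0, noise) for v in true_efficacy]
    return lambda i: readings[i]

for noise in (0.0, 0.1, 1.0):
    pick = select_best(candidates, make_cheap_assay(noise))
    print(f"assay noise {noise}: picked compound has true efficacy {clinical_truth(pick):.3f}")
```

With a noise-free test, the selection recovers the best candidate. As noise grows, the pick tends to drift toward an ordinary compound, and the shortfall surfaces only at the later, expensive clinical stage.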

Mapped searching.

Rather than perform shotgun sampling, a company can instead obtain a fundamental understanding of the different coupled components (and their associated technologies), including how certain parts affect one another. With such knowledge, researchers will be able to narrow their field of exploration and arrive at useful inventions more quickly. This approach is analogous to “mapping” the technology landscape. Although such a strategy typically requires an expensive and long-term investment in basic or applied science, the potential payoffs are huge. Research on patent citations demonstrates that this approach generates inventions of, on average, twice the import of those produced by the modular recombination strategy.3 Translating this into financial rewards promises an even greater payoff because the commercial value of highly cited patents increases exponentially with their citations. Specifically, recent work on breakthrough innovations has found that a patent with 14 citations has 100 times the value of a patent with eight citations.4
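To see what "exponentially" implies, assume that value grows as V(c) = V0 · e^(k·c) in the citation count c. This is a simplification of our own, not necessarily the cited study's model. Under it, the two figures quoted above pin down k, and therefore how much each additional citation multiplies a patent's value.

```python
import math

def per_citation_multiplier(value_ratio, citations_high, citations_low):
    """Solve value_ratio = exp(k * (citations_high - citations_low)) and return exp(k)."""
    k = math.log(value_ratio) / (citations_high - citations_low)
    return math.exp(k)

m = per_citation_multiplier(100, 14, 8)
print(f"each extra citation multiplies value by about {m:.2f}")  # about 2.15
```

Under this assumption, each extra citation roughly doubles a patent's value. That is why a modest citation advantage can translate into a much larger financial one.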

An important point to remember is that companies don’t necessarily have to conduct basic research to benefit from it. Simple awareness of and familiarity with the scientific literature can provide a powerful advantage when dealing with highly coupled technologies. Alternatively, companies can pool their risk by participating in an industrial consortium like Sematech, in which member firms (Intel, Motorola, IBM, Texas Instruments and others) jointly develop key semiconductor manufacturing technologies. Such approaches are particularly crucial for small firms that do not typically have the substantial resources necessary to undertake a mapped search on their own. In addition, smaller companies and startups can greatly extend their in-house knowledge by building an informal outside network of scientists across a variety of fields, perhaps by locating close to universities and then establishing relationships with the researchers there.5 Whatever the means, providing researchers with even an inexact map of their rugged technological landscape can pay off handsomely as long as the information is accurate enough for finding valuable inventions more quickly.

The power of mapped searching is amply illustrated by H-P’s invention of the inkjet printer. Its legendary dominance of the inkjet market makes it difficult to imagine that the company struggled greatly to develop the technology, eventually succeeding where legions had failed. In fact, the basic concept of inkjet printing was first published more than a century ago by Lord Kelvin in the 1867 Proceedings of the Royal Society. Since then, IBM, Sperry Rand, Stanford University and Xerox each invested millions of dollars in the technology, yet the resulting products suffered from fragile parts and sensitivities that defied reliable operation. The inkjet printer challenged inventors tremendously because it involved so much coupling among the different components, including the chemistry of the ink, the physical layout of the resistors providing the heat pulse to spray the ink, the shape of the electrical signal through the resistors and so on.

John Vaught, a technician at H-P labs, finally succeeded in putting together the winning combination of intricate components that led to the company’s blockbuster product — an achievement made possible only through a basic understanding of the complex physics of superheated liquids.6 Vaught’s story holds several lessons. First, the scientific literature can provide a wealth of valuable information. After Vaught made an initial breakthrough with microjets, his H-P colleagues Howard Taub and John Meyers read the appropriate physics journals to gain from others’ knowledge of bubble nucleation, the phenomenon that is the basis for all inkjet printers. Second, researchers can tap into their personal network of colleagues for access to the necessary expertise. Taub and Meyers contacted their friends at the California Institute of Technology to gain the latest information on microjets and high-temperature vaporization. Third, scientifically capable inventors then can do the remainder of the necessary basic research. Meyers and his colleagues devised original simulations and numerical techniques to understand how best to apply the mechanism of bubble nucleation to the specific context of their invention. This work helped map H-P’s technology landscape, enabling the company to understand how nucleation occurs in ink that is heated to extremely high temperatures.

A technology map can be particularly useful for companies that have hit a dead end but don’t yet realize it. Consider the case of Lord Corporation, a Cary, North Carolina, company that manufactures adhesives and dampers. In the 1980s, Lord was trying to develop a “smart damper” that would be able to, for example, change its resistance to give the rider of an exercise bike the impression of climbing uphill. At the time, Lord (like most of its competitors) had been focusing on using “electro-rheological” (ER) fluids, which thicken (thus increasing their resistance) when an electric field is applied to them. The company struggled with ER materials until Dave Carlson, a company engineering fellow, did a crucial calculation showing that the technology, even at its best, was essentially impractical — an analysis that required a fundamental understanding of the chemistry and physics involved.

Given that realization, Lord quickly switched its search to investigate magneto-rheological (MR) materials, which are similar to ER fluids except that their damping qualities change when a magnetic (not electric) field is applied. This shift to a different region of the technology landscape proved fortuitous. Lord researchers experimented with a variety of MR materials and discovered that a particular oil with iron filings and additives seemed to work best. To investigate that finding, Lord returned to science and did original research on the breakdown of iron particles under mechanical and magnetic stress. This work has led to the development of a variety of products, including an earthquake damper for buildings and bridges, smart shock absorbers for cars and a prosthetic leg that provides dynamic resistance on the basis of the wearer’s weight, gait and physical surroundings (for example, whether the wearer is walking on a flat surface or climbing stairs).

A technology map is also invaluable for researchers who think they’re at a technological dead end but really aren’t. Indeed, scientific theories can encourage an ongoing search in the face of repeated failure. When David Wong of Eli Lilly was looking for a new drug to treat depression, he continued investigating a particular set of Benadryl compounds, even though that grouping had previously failed to show any promise. But Wong persisted because he had a theory of serotonin uptake: an understanding of the mechanism by which certain brain cells release and absorb the powerful neurotransmitter. Eventually, thanks to that knowledge, Wong discovered the antidepressant drug Prozac.

For all its advantages, though, mapped searching can be extremely tricky to implement. Specifically, companies that deploy mapped searching must pay special attention to how they manage their scientists. Engineers tend to seek career recognition within their firms and respond well to both compensation- and career-based incentives. Scientists, on the other hand, often seek professional recognition through external publication and respect within the wider scientific community. Recent evidence even suggests that scientists will accept lower salaries for the opportunity to achieve such external recognition.7 Keeping a scientist’s work proprietary, then, can prove difficult when that person measures career success by the number of articles published and the prestige of the journals in which they appear. Furthermore, the fundamental norms of science and the very basis for its success stem from open publication and discourse. Lastly, good science codifies and articulates knowledge. Thus, even if a firm’s scientists conduct their research without the rewards of external publication, the codification of their knowledge makes that information less tacit and hence more easily appropriated by other firms.8 For example, researchers who leave a company can more easily reconstruct their work for a rival.

These prickly issues are illustrated by IBM’s experience with its breakthrough on copper-interconnect technology.9 The invention, which enables the transistors on a chip to be connected at very low electrical resistances, was a major coup. When IBM announced it, the company’s stock price rose 5%, and when the technology was released into production, the stock price rose an additional 6%. Wisely, IBM allowed its researchers to publish only general ideas about the technology, keeping the specific details proprietary. And it shrewdly refrained from applying for patents to avoid disclosing that valuable information. During its downturn in the early 1990s, however, IBM suffered drastic cutbacks and, as a result, several researchers left the company. With this diffusion of knowledge, the rest of the industry gained a better grasp of the true advantages of copper-interconnect technology, and other semiconductor manufacturers increased their efforts in that area, catching up with IBM’s expertise within a couple of years. But in the chip industry, two years represents a generation in technology, enabling IBM to ship more than a million chips before its competitors could respond.

The main lesson of IBM’s success with its copper-interconnect innovation is that mapped searching across a rugged technology landscape can yield incredible returns. To reap those benefits, however, companies must actively manage and closely monitor their innovation strategies. Generally speaking, a mapped strategy requires greater intellectual property protection and stronger complementary capabilities than the approach of shotgun sampling. On the other hand, it also requires less IP protection and fewer complementary capabilities than a modular approach.

Finding the Right Ground

Companies that pursue a modular strategy for product innovation should find that their components mix and match easily with little impact on system performance. They should also be able to substitute raw materials, vendors and manufacturing processes easily. In contrast, organizations that choose to compete with a coupled strategy should find just the opposite. Their inventors should find it difficult to explain exactly why innovations work, why they work only in small “sweet spots,” or why a small change can crash an entire system.
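One way to run this diagnostic is the interaction check from a simple two-by-two experiment. Measure how much swapping one component improves the system, once with each of two versions of a second component. The sketch below uses hypothetical performance functions; in practice the four measurements would come from bench tests of real parts.

```python
def interaction_effect(performance, a0, a1, b0, b1):
    """Difference-in-differences for two components A and B.

    Returns how much the gain from upgrading A (a0 -> a1) depends on which
    version of B is installed. Zero means A and B behave modularly; a large
    value signals coupling.
    """
    gain_with_b0 = performance(a1, b0) - performance(a0, b0)
    gain_with_b1 = performance(a1, b1) - performance(a0, b1)
    return gain_with_b1 - gain_with_b0


def modular(a, b):
    return 2 * a + 3 * b   # hypothetical: contributions simply add up

def coupled(a, b):
    return a * b           # hypothetical: each part's effect depends on the other

print(interaction_effect(modular, 1, 2, 1, 5))  # 0
print(interaction_effect(coupled, 1, 2, 1, 5))  # 4
```

A result near zero across many component pairs suggests smooth terrain. Large or sign-flipping interactions are the quantitative signature of the "sweet spots" and fragile behavior described above.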

An important point to remember is that industries are dynamic entities, so organizations need to continually reassess whether they are exploring the right technology landscapes. As a market matures, for example, a company that has built a strong brand might elect to focus on lowering its manufacturing costs, perhaps by using more standardized parts to develop its next generation of products. A complicating factor is that different technologies and projects at the same company can represent different landscapes, each requiring a tailored strategy.

To move to a more rugged landscape, a company should motivate its engineers to work with new and more-sensitive components — a move that many may initially resist not only because they prefer using what’s familiar but also because of the greater inherent frustrations of working with coupled technologies. Regardless, an organization can support the transition by giving researchers the time and resources to explore ideas that might at first seem impractical and by not penalizing them for a temporary reduction in productivity.

Going in the opposite direction (that is, moving to a smoother technology landscape) presents a relatively easy and more conventional managerial challenge. Companies that want to make this transition need to stress increased reliability, productivity and the reusability of components and designs. Of course, migrating to a modular strategy of product innovation only makes sense for companies that possess the complementary capabilities necessary to prosper in commodity-like markets.

Either type of transition across a technology landscape presents its share of challenges. But the crucial point is that companies are not passive bystanders; they can migrate across the terrain to find the topography that suits them best. And by doing so, they can capitalize on their strengths — and avoid having their competitors exploit their weaknesses.

References

1. See also C. Baldwin and K. Clark, “Managing in an Age of Modularity,” Harvard Business Review 75 (September–October 1997): 84–95.

2. S. Thomke, “Enlightened Experimentation: The New Imperative for Innovation,” Harvard Business Review 79 (February 2001): 66–75.