Are Responsible AI Programs Ready for Generative AI? Experts Are Doubtful

A panel of experts weighs in on whether responsible AI programs effectively govern generative AI solutions such as ChatGPT.

In collaboration with BCG

For the second year in a row, MIT Sloan Management Review and Boston Consulting Group (BCG) have assembled an international panel of AI experts that includes academics and practitioners to help us understand how responsible artificial intelligence (RAI) is being implemented across organizations worldwide. This year, we’re examining the extent to which organizations are addressing risks that stem from the use of internally and externally developed AI tools. The first question we posed to our panelists was about third-party AI tools. This month, we’re digging deeper into the specific risks associated with generative AI, a set of algorithms that can use unvetted training data to generate content such as text, images, or audio that may seem realistic or factual but may be biased, inaccurate, or fictitious.

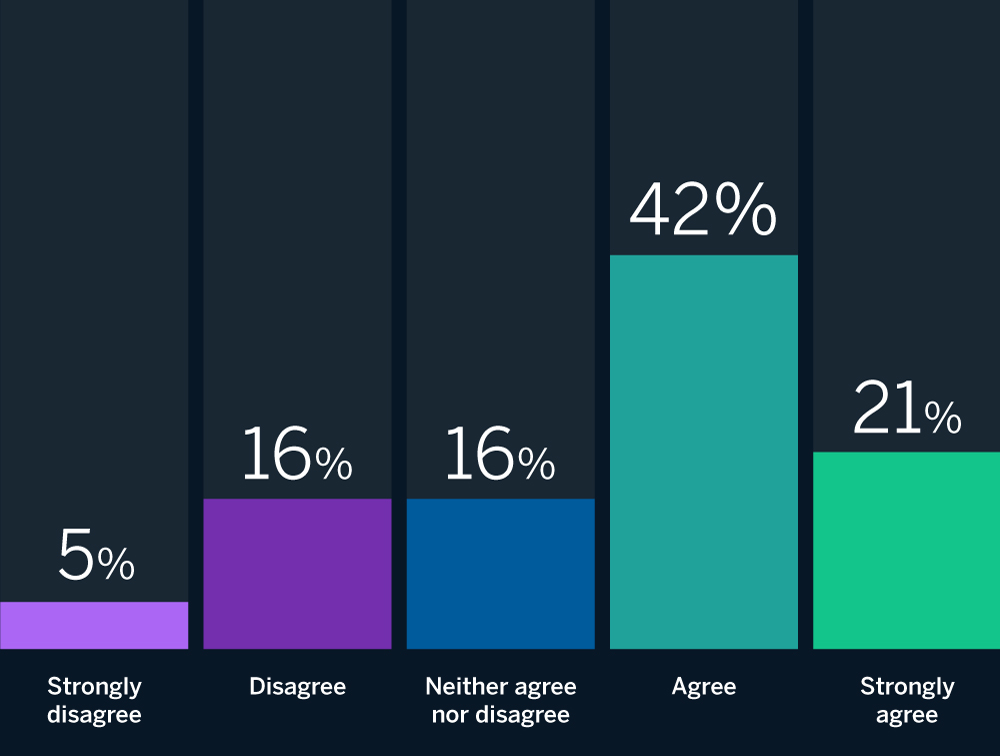

We found that a majority (63%) of our panelists agree or strongly agree with the following statement: Most RAI programs are unprepared to address the risks of new generative AI tools. That is, RAI programs are clearly struggling to address the potential negative consequences of using generative AI. But many of our experts asserted that an approach that emphasizes the core RAI principles embedded in an organization’s DNA, coupled with an RAI program that is continually adapting to address new and evolving risks, can help. Based on insights from our panelists and drawing on our own observations and experience in this field, we offer some recommendations on how organizations can start to address the risks posed by the sudden, rapid adoption of powerful generative AI tools.

The Panelists Respond

Most RAI programs are unprepared to address the risks of new generative AI tools.

Most panelists agree that RAI programs are unprepared to address the organizational risks posed by generative AI.

Source: Responsible AI panel of 19 experts in artificial intelligence strategy.

Addressing the Risks in Practice

According to our experts, RAI programs are struggling to address the risks associated with generative AI in practice for at least three reasons. First, generative AI tools are qualitatively different from other AI tools. Jaya Kolhatkar, chief data officer at Hulu and executive vice president of data for Disney Streaming, observes that recent developments “showcase some of the more general-purpose AI tools that we may not have considered as part of an organization’s larger responsible AI initiative.” Given the technology’s general-purpose nature, adds Richard Benjamins, chief AI and data strategist at Telefónica, “an RAI program that evaluates the AI technology or algorithm without a specific use case in mind … is not appropriate for generative AI, since the ethical and social impact will depend on the specific use case.”

Second, and related to its qualitatively different nature, generative AI introduces new risks. As Oarabile Mudongo, a policy specialist at the African Observatory on Responsible AI, explains, “The risks associated with generative AI tools may differ from those associated with other AI tools, such as prebuilt machine learning models or data analysis tools. … RAI programs that have not specifically addressed these risks may be unprepared to mitigate them.” Similarly, Salesforce’s chief ethical and humane use officer, Paula Goldman, argues, “Generative AI introduces new risks with higher stakes in the context of a global business. What makes this technology so unique is that we’re moving from classification and prediction of data to content creation — often using vast amounts of data to train foundation models.” This is not something that RAI programs are necessarily equipped to tackle.

Strongly agree

“New generative AI tools, like ChatGPT, Bing Chat, Bard, and GPT-4, are creating original images, sounds, and text by using machine learning algorithms that are trained on large amounts of data. Since RAI frameworks were not written to deal with the sudden, unimaginable number of risks that generative AI tools are introducing into society, the companies developing these tools need to take responsibility by adopting new AI ethics principles.”

Third, advancements in generative AI are outpacing RAI program maturation. For example, Philip Dawson, head of AI policy at Armilla AI, contends that “RAI programs that require the implementation of AI risk management frameworks are well positioned to help companies manage the risks associated with new generative AI tools from a process standpoint.” But he cautions that “most enterprises have yet to adapt their third-party risk management programs to the AI context and do not subject AI vendors or their products to risk assessments. Accordingly, enterprises are largely blind to the risks they are taking on when procuring third-party AI applications.” Similarly, Dataiku’s responsible AI lead, Triveni Gandhi, says, “As the pace of unregulated development increases, existing RAI programs may be unprepared in terms of the specialized tooling needed to understand and mitigate potential harm from new tools.”

The Evolution of RAI

Just as AI is advancing at an unprecedented pace, RAI programs must continually evolve to address new, and potentially unforeseeable, risks. Giuseppe Manai, cofounder, COO, and chief scientist at Stemly, cautions that “generative tools are evolving rapidly, and the full extent of their capabilities — present and future — is not fully known.” For that reason, Mudongo advises organizations to continually evolve their programs: “As with any AI tool, it is crucial to thoroughly assess and monitor the risks associated with generative AI tools and to adapt RAI programs accordingly. As new AI technologies continue to emerge and evolve, it is important for RAI programs to stay up to date and proactively manage risks to ensure responsible AI use.”

Neither agree nor disagree

“What we have seen lately is rapid technological development and new, powerful tools released with hardly any prior public debate about the risks, societal implications, and new ethical challenges that arise. We all need to figure this out as we go. In that sense, I believe most responsible AI programs are unprepared.”

Our experts largely agree that core RAI principles provide a foundation for addressing advances in generative AI and other AI-related technologies. Principles like trust, transparency, and governance apply equally to all AI tools, generative or not. As Ashley Casovan, the Responsible AI Institute’s executive director, puts it, “The same basic rules and principles apply: Understand the impact or harm of the systems you are seeking to deploy; identify appropriate mitigation measures, such as the implementation of standards; and set up governance and oversight to continuously monitor these systems throughout their life cycles.”

Similarly, Steven Vosloo, a digital policy specialist in UNICEF’s Office of Global Insight and Policy, observes, “The current principles of responsible AI apply to newly developed generative AI tools. For example, it is still necessary to aim for AI systems that are transparent and explainable.” And Gandhi asserts that “the key concepts in responsible AI — such as trust, privacy, safe deployment, and transparency — can actually mitigate some of the risks of new generative AI tools.”

Some experts recognize that the foundations for addressing generative AI are already in place in organizations with strong RAI practices. Linda Leopold, H&M Group’s head of responsible AI and data, says, “If you have a strong foundation in your responsible AI program, you should be somewhat prepared. The same ethical principles would still be applicable, even if they have to be complemented by more detailed guidance.” She adds that it will be easier “if the responsible AI program already has a strong focus on culture and communication.”

David Hardoon, CEO of Aboitiz Data Innovation and chief data and AI officer at Union Bank of the Philippines, also contends that “most RAI programs have the necessary foundations that cover the gamut of AI risks, generative or otherwise,” adding that “any ‘unpreparedness’ would arise from how these RAI programs are comprehensively implemented, operationalized, and enforced.”

Finally, Simon Chesterman, dean and the Provost’s Chair professor at the National University of Singapore Faculty of Law, argues, “Any RAI program that is unable to adapt to changing technologies wasn’t fit for purpose to begin with. The ethics and laws that underpin responsible AI should be, as far as possible, future-proof: able to accommodate changing tech and use cases.”

Recommendations

Our recommendations for organizations seeking to address the risks of generative AI tools through their RAI efforts are similar to those for mitigating the risks of third-party AI. Specifically, we recommend the following:

- Reinforce your RAI foundations, and commit to evolving over time. The fundamental concepts, principles, and tenets of RAI apply equally to all AI tools, generative or not. Therefore, the best starting point for addressing the risks of generative AI is ensuring that your RAI program, policies, and practices are built on solid ground. Do this by maturing your RAI program as much as possible to cover a broad, substantive scope and ensuring that it applies across the entire organization rather than on an ad hoc basis. Moreover, given AI’s pace of technological advancement, commit to RAI as an ongoing project and accept that the job is never done.

- Invest in additional education and awareness. In addition to having a strong foundational RAI program, organizations should invest in education and awareness-building around the unique nature of generative AI use cases and risks, including through employee training programs. Because these are general-purpose tools with a vast array of potential use cases, it is important to embed this understanding and awareness into the organizational culture.

- Keep track of your vendors. In this increasingly complex AI ecosystem, third-party tools and vendor solutions, including those based on generative AI, introduce material risks to an organization. To minimize these risks, incorporate strong vendor management practices — including ones that leverage any existing technical and legal benchmarks or standards — into the design and implementation of your RAI program. Consider, for example, preliminary risk assessments, bias measurements, and ongoing risk management practices. Do not assume that you can outsource liability when something goes wrong.

“Most RAI programs address the risks of traditional AI systems, which focus on detecting patterns, making decisions, honing analytics, classifying data, and detecting fraud. On the other hand, generative AI uses machine learning to process a vast amount of visual or textual data, much of which is scraped from unknown sources on the internet, and then determines what things are most likely to appear near other things. Due to the nature of data sets used in generative AI tools and potential biases in those data sets from unknown data sources, most RAI programs are unprepared to address the risks of new generative AI tools.”